Compare commits

py3-latest...zeronet-en

147 commits

Commits (SHA1):

- 0aed438ae5
- ec8203fff7
- 45b92157cf
- 5e61fe8b06
- 90b6cb9cdf
- 1362b7e2e6
- 00061c45a5
- 7fef6dde0e
- c594e63512
- 36adb63f61
- 59f7d3221f
- b39a1a5f1b
- f2324b8eb4
- 912688dbbd
- f2e1c2ad81
- 62f88a11a7
- 7dbf8da8cb
- f79aca1dad
- 7575e2d455
- 58b2d92351
- 86a73e4665
- 18da16e8d4
- 523e399d8c
- 643dd84a28
- 4448358247
- 0e48004563
- 0bbf19aab9
- 545fe9442c
- 5c8bbe5801
- 348a4b0865
- f7372fc393
- b7a3aa37e1
- b6b23d0e8e
- e000eae046
- 1863043505
- d32d9f781b
- 168c436b73
- 77e0bb3650
- ef69dcd331
- 32eb47c482
- f484c0a1b8
- 645f3ba34a
- 93a95f511a
- dff52d691a
- 1a8d30146e
- 8f908c961d
- ce971ab738
- 1fd1f47a94
- b512c54f75
- b4f94e5022
- e612f93631
- fe24e17baa
- 1b68182a76
- 1ef129bdf9
- 19b840defd
- e3daa09316
- 77d2d69376
- c36cba7980
- ddc4861223
- cd3262a2a7
- b194eb0f33
- 5744e40505
- 5ec970adb8
- 75bba6ca1a
- 7e438a90e1
- 46bea95002
- 2a25d61b96
- ba6295f793
- 23ef37374b
- 488fd4045e
- 769a2c08dd
- d2b65c550c
- d5652eaa51
- 164f5199a9
- 72e5d3df64
- 1c73d1a095
- b9ec7124f9
- abbd2c51f0
- be65ff2c40
- c772592c4a
- 5d6fe6a631
- adaeedf4d8
- 8474abc967
- 986dedfa7f
- 0151546329
- d0069471b8
- 1144964062
- 6c8849139f
- 3677684971
- 4e27e300e3
- 697b12d808
- 570f854485
- 5d5b3684cc
- 7354d712e0
- 6c8b059f57
- 27ce79f044
- 90d01e6004
- 325f071329
- c84b413f58
- ba16fdcae9
- ea21b32b93
- e8358ee8f2
- d1b9cc8261
- 829fd46781
- adf40dbb6b
- 8fd88c50f9
- 511a90a5c5
- 3ca323f8b0
- 112c778c28
- f1d91989d5
- b6ae96db5a
- 3d68a25e13
- d4239d16f9
- b0005026b4
- 1f34f477ef
- e33a54bc65
- 920ddd944f
- f9706e3dc4
- 822e53ebb8
- 5f6589cfc2
- 95c8f0e97e
- cb363d2f11
- 0d02c3c4da
- 2811d7c9d4
- 96e935300c
- 2e1b0e093f
- fee63a1ed2
- 142f5862df
- de5a9ff67b
- d57deaa8e4
- eb6d0c9644
- a36b2c9241
- 9a8519b487
- f4708d9781
- b7550474a5
- 735061b79d
- aa6d7a468d
- f5b63a430c
- 6ee1db4197
- 37627822de
- d35a15d674
- c8545ce054
- 8f8e10a703
- 33c81a89e9
- 84526a6657
- 3910338b28
- b2e92b1d10

61 changed files with 3365 additions and 1409 deletions

```diff
@@ -1,40 +0,0 @@
-name: Build Docker Image on Commit
-
-on:
-  push:
-    branches:
-      - main
-    tags:
-      - '!' # Exclude tags
-
-jobs:
-  build-and-publish:
-    runs-on: docker-builder
-
-    steps:
-      - name: Checkout repository
-        uses: actions/checkout@v4
-
-      - name: Set REPO_VARS
-        id: repo-url
-        run: |
-          echo "REPO_HOST=$(echo "${{ github.server_url }}" | sed 's~http[s]*://~~g')" >> $GITHUB_ENV
-          echo "REPO_PATH=${{ github.repository }}" >> $GITHUB_ENV
-
-      - name: Login to OCI registry
-        run: |
-          echo "${{ secrets.OCI_TOKEN }}" | docker login $REPO_HOST -u "${{ secrets.OCI_USER }}" --password-stdin
-
-      - name: Build and push Docker images
-        run: |
-          # Build Docker image with commit SHA
-          docker build -t $REPO_HOST/$REPO_PATH:${{ github.sha }} .
-          docker push $REPO_HOST/$REPO_PATH:${{ github.sha }}
-
-          # Build Docker image with nightly tag
-          docker tag $REPO_HOST/$REPO_PATH:${{ github.sha }} $REPO_HOST/$REPO_PATH:nightly
-          docker push $REPO_HOST/$REPO_PATH:nightly
-
-          # Remove local images to save storage
-          docker rmi $REPO_HOST/$REPO_PATH:${{ github.sha }}
-          docker rmi $REPO_HOST/$REPO_PATH:nightly
```
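The deleted commit workflow derives `REPO_HOST` by stripping the URL scheme from `github.server_url` with `sed 's~http[s]*://~~g'`, then pushes the image under two tags: the commit SHA and a moving `nightly` tag. Below is a minimal Python sketch of how those image references are composed. The helper names and the server URL, repository and SHA in the example are hypothetical; only the naming pattern comes from the workflow.

```python
import re
from typing import List


def repo_host(server_url: str) -> str:
    """Same substitution as the workflow's `sed 's~http[s]*://~~g'`:
    remove every "http://" or "https://" occurrence from the server URL."""
    return re.sub(r"http[s]*://", "", server_url)


def commit_image_refs(server_url: str, repository: str, sha: str) -> List[str]:
    """Image references pushed by the commit workflow: one tag per commit SHA,
    plus the `nightly` tag that is re-pointed on every push to main."""
    base = f"{repo_host(server_url)}/{repository}"
    return [f"{base}:{sha}", f"{base}:nightly"]


if __name__ == "__main__":
    # Hypothetical server URL, repository and SHA, for illustration only.
    for ref in commit_image_refs("https://git.example.org", "owner/zeronet", "0aed438ae5"):
        print(ref)
```

One caveat the sketch makes easy to see: Docker requires the repository path of an image reference to be lowercase, while `github.repository` keeps its original case, so a repository named like `ZeroNetX/ZeroNet` would presumably need lowercasing before `docker build -t`.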

```diff
@@ -1,37 +0,0 @@
-name: Build and Publish Docker Image on Tag
-
-on:
-  push:
-    tags:
-      - '*'
-
-jobs:
-  build-and-publish:
-    runs-on: docker-builder
-
-    steps:
-      - name: Checkout repository
-        uses: actions/checkout@v4
-
-      - name: Set REPO_VARS
-        id: repo-url
-        run: |
-          echo "REPO_HOST=$(echo "${{ github.server_url }}" | sed 's~http[s]*://~~g')" >> $GITHUB_ENV
-          echo "REPO_PATH=${{ github.repository }}" >> $GITHUB_ENV
-
-      - name: Login to OCI registry
-        run: |
-          echo "${{ secrets.OCI_TOKEN }}" | docker login $REPO_HOST -u "${{ secrets.OCI_USER }}" --password-stdin
-
-      - name: Build and push Docker image
-        run: |
-          TAG=${{ github.ref_name }} # Get the tag name from the context
-          # Build and push multi-platform Docker images
-          docker build -t $REPO_HOST/$REPO_PATH:$TAG --push .
-          # Tag and push latest
-          docker tag $REPO_HOST/$REPO_PATH:$TAG $REPO_HOST/$REPO_PATH:latest
-          docker push $REPO_HOST/$REPO_PATH:latest
-
-          # Remove the local image to save storage
-          docker rmi $REPO_HOST/$REPO_PATH:$TAG
-          docker rmi $REPO_HOST/$REPO_PATH:latest
```
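The deleted tag workflow pushes the image as `$TAG` (taken from `github.ref_name`) and then re-points `latest`. Below is a minimal Python sketch of that naming, assuming the triggering ref looks like `refs/tags/<name>`. It also checks the tag against Docker's tag grammar (up to 128 characters from `[A-Za-z0-9_.-]`, not starting with `.` or `-`), because git accepts tag names such as `release/1.0` that Docker would reject. The helper names are hypothetical.

```python
import re
from typing import List

# Docker image tag grammar: first char [A-Za-z0-9_], then up to 127 of [A-Za-z0-9_.-].
_DOCKER_TAG = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def ref_name(ref: str) -> str:
    """Short ref name in the spirit of `github.ref_name`: drop refs/tags/ or refs/heads/."""
    for prefix in ("refs/tags/", "refs/heads/"):
        if ref.startswith(prefix):
            return ref[len(prefix):]
    return ref


def tag_image_refs(host: str, repository: str, ref: str) -> List[str]:
    """References the tag workflow pushes: the git tag itself and `latest`."""
    tag = ref_name(ref)
    if not _DOCKER_TAG.fullmatch(tag):
        raise ValueError(f"git tag {tag!r} is not a valid Docker image tag")
    base = f"{host}/{repository}"
    return [f"{base}:{tag}", f"{base}:latest"]


if __name__ == "__main__":
    # Hypothetical host, repository and tag, for illustration only.
    print(tag_image_refs("git.example.org", "owner/zeronet", "refs/tags/v0.9.0"))
```

Because the trigger is `tags: - '*'`, pushing a tag for an older release later would also move `latest` back to that release. Note also that, despite its "multi-platform" comment, the `docker build` call passes no `--platform` list, so it presumably builds only for the runner's own platform.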

.github/FUNDING.yml (vendored, 11 changes)

```diff
@@ -1,10 +1 @@
-github: canewsin
-patreon: # Replace with a single Patreon username e.g., user1
-open_collective: # Replace with a single Open Collective username e.g., user1
-ko_fi: canewsin
-tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
-community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
-liberapay: canewsin
-issuehunt: # Replace with a single IssueHunt username e.g., user1
-otechie: # Replace with a single Otechie username e.g., user1
-custom: ['https://paypal.me/PramUkesh', 'https://zerolink.ml/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/']
+custom: https://zerolink.ml/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/
```

.github/workflows/codeql-analysis.yml (vendored, 72 changes)

```diff
@@ -1,72 +0,0 @@
-# For most projects, this workflow file will not need changing; you simply need
-# to commit it to your repository.
-#
-# You may wish to alter this file to override the set of languages analyzed,
-# or to provide custom queries or build logic.
-#
-# ******** NOTE ********
-# We have attempted to detect the languages in your repository. Please check
-# the `language` matrix defined below to confirm you have the correct set of
-# supported CodeQL languages.
-#
-name: "CodeQL"
-
-on:
-  push:
-    branches: [ py3-latest ]
-  pull_request:
-    # The branches below must be a subset of the branches above
-    branches: [ py3-latest ]
-  schedule:
-    - cron: '32 19 * * 2'
-
-jobs:
-  analyze:
-    name: Analyze
-    runs-on: ubuntu-latest
-    permissions:
-      actions: read
-      contents: read
-      security-events: write
-
-    strategy:
-      fail-fast: false
-      matrix:
-        language: [ 'javascript', 'python' ]
-        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]
-        # Learn more about CodeQL language support at https://aka.ms/codeql-docs/language-support
-
-    steps:
-    - name: Checkout repository
-      uses: actions/checkout@v3
-
-    # Initializes the CodeQL tools for scanning.
-    - name: Initialize CodeQL
-      uses: github/codeql-action/init@v2
-      with:
-        languages: ${{ matrix.language }}
-        # If you wish to specify custom queries, you can do so here or in a config file.
-        # By default, queries listed here will override any specified in a config file.
-        # Prefix the list here with "+" to use these queries and those in the config file.
-
-        # Details on CodeQL's query packs refer to : https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/configuring-code-scanning#using-queries-in-ql-packs
-        # queries: security-extended,security-and-quality
-
-
-    # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
-    # If this step fails, then you should remove it and run the build manually (see below)
-    - name: Autobuild
-      uses: github/codeql-action/autobuild@v2
-
-    # ℹ️ Command-line programs to run using the OS shell.
-    # 📚 See https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions#jobsjob_idstepsrun
-
-    #   If the Autobuild fails above, remove it and uncomment the following three lines.
-    #   modify them (or add more) to build your code if your project, please refer to the EXAMPLE below for guidance.
-
-    # - run: |
-    #   echo "Run, Build Application using script"
-    #   ./location_of_script_within_repo/buildscript.sh
-
-    - name: Perform CodeQL Analysis
-      uses: github/codeql-action/analyze@v2
```

.github/workflows/tests.yml (vendored, 71 changes)

```diff
@@ -4,48 +4,49 @@ on: [push, pull_request]

 jobs:
   test:
-    runs-on: ubuntu-20.04
+
+    runs-on: ubuntu-18.04
     strategy:
       max-parallel: 16
       matrix:
-        python-version: ["3.7", "3.8", "3.9"]
+        python-version: [3.6, 3.7, 3.8, 3.9]

     steps:
-      - name: Checkout ZeroNet
-        uses: actions/checkout@v2
-        with:
-          submodules: "true"
+    - name: Checkout ZeroNet
+      uses: actions/checkout@v2
+      with:
+        submodules: 'true'

-      - name: Set up Python ${{ matrix.python-version }}
-        uses: actions/setup-python@v1
-        with:
-          python-version: ${{ matrix.python-version }}
+    - name: Set up Python ${{ matrix.python-version }}
+      uses: actions/setup-python@v1
+      with:
+        python-version: ${{ matrix.python-version }}

-      - name: Prepare for installation
-        run: |
-          python3 -m pip install setuptools
-          python3 -m pip install --upgrade pip wheel
-          python3 -m pip install --upgrade codecov coveralls flake8 mock pytest==4.6.3 pytest-cov selenium
+    - name: Prepare for installation
+      run: |
+        python3 -m pip install setuptools
+        python3 -m pip install --upgrade pip wheel
+        python3 -m pip install --upgrade codecov coveralls flake8 mock pytest==4.6.3 pytest-cov selenium

-      - name: Install
-        run: |
-          python3 -m pip install --upgrade -r requirements.txt
-          python3 -m pip list
+    - name: Install
+      run: |
+        python3 -m pip install --upgrade -r requirements.txt
+        python3 -m pip list

-      - name: Prepare for tests
-        run: |
-          openssl version -a
-          echo 0 | sudo tee /proc/sys/net/ipv6/conf/all/disable_ipv6
+    - name: Prepare for tests
+      run: |
+        openssl version -a
+        echo 0 | sudo tee /proc/sys/net/ipv6/conf/all/disable_ipv6

-      - name: Test
-        run: |
-          catchsegv python3 -m pytest src/Test --cov=src --cov-config src/Test/coverage.ini
-          export ZERONET_LOG_DIR="log/CryptMessage"; catchsegv python3 -m pytest -x plugins/CryptMessage/Test
-          export ZERONET_LOG_DIR="log/Bigfile"; catchsegv python3 -m pytest -x plugins/Bigfile/Test
-          export ZERONET_LOG_DIR="log/AnnounceLocal"; catchsegv python3 -m pytest -x plugins/AnnounceLocal/Test
-          export ZERONET_LOG_DIR="log/OptionalManager"; catchsegv python3 -m pytest -x plugins/OptionalManager/Test
-          export ZERONET_LOG_DIR="log/Multiuser"; mv plugins/disabled-Multiuser plugins/Multiuser && catchsegv python -m pytest -x plugins/Multiuser/Test
-          export ZERONET_LOG_DIR="log/Bootstrapper"; mv plugins/disabled-Bootstrapper plugins/Bootstrapper && catchsegv python -m pytest -x plugins/Bootstrapper/Test
-          find src -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
-          find plugins -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
-          flake8 . --count --select=E9,F63,F72,F82 --show-source --statistics --exclude=src/lib/pyaes/
+    - name: Test
+      run: |
+        catchsegv python3 -m pytest src/Test --cov=src --cov-config src/Test/coverage.ini
+        export ZERONET_LOG_DIR="log/CryptMessage"; catchsegv python3 -m pytest -x plugins/CryptMessage/Test
+        export ZERONET_LOG_DIR="log/Bigfile"; catchsegv python3 -m pytest -x plugins/Bigfile/Test
+        export ZERONET_LOG_DIR="log/AnnounceLocal"; catchsegv python3 -m pytest -x plugins/AnnounceLocal/Test
+        export ZERONET_LOG_DIR="log/OptionalManager"; catchsegv python3 -m pytest -x plugins/OptionalManager/Test
+        export ZERONET_LOG_DIR="log/Multiuser"; mv plugins/disabled-Multiuser plugins/Multiuser && catchsegv python -m pytest -x plugins/Multiuser/Test
+        export ZERONET_LOG_DIR="log/Bootstrapper"; mv plugins/disabled-Bootstrapper plugins/Bootstrapper && catchsegv python -m pytest -x plugins/Bootstrapper/Test
+        find src -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
+        find plugins -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
+        flake8 . --count --select=E9,F63,F72,F82 --show-source --statistics --exclude=src/lib/pyaes/
```
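The two `find ... | xargs -n 1 python3 -c ...` lines in the Test step start one Python interpreter per `.json` file under `src` and `plugins`, print the path followed by `[OK]`, and make the step fail if any file does not parse. Below is a minimal Python sketch of the same check in a single process. The function name is hypothetical, and it assumes the files are UTF-8 (the one-liner opens them with the platform default encoding).

```python
import json
import sys
from pathlib import Path
from typing import List


def check_json_files(root: str) -> List[str]:
    """Parse every *.json file under `root`; print a status line per file
    and return the paths that failed to parse."""
    failures: List[str] = []
    for path in sorted(Path(root).rglob("*.json")):
        try:
            with path.open(encoding="utf-8") as f:
                json.load(f)
            print(path, "[OK]")
        except ValueError as exc:  # covers json.JSONDecodeError and UnicodeDecodeError
            print(path, f"[FAIL] {exc}")
            failures.append(str(path))
    return failures


if __name__ == "__main__":
    # Same roots as the CI step by default; directories that do not exist are skipped.
    roots = [r for r in (sys.argv[1:] or ["src", "plugins"]) if Path(r).is_dir()]
    failed = [p for root in roots for p in check_json_files(root)]
    if failed:
        sys.exit(1)
```

Running everything in one interpreter avoids a process start per file, and the non-zero exit keeps the CI step failing in the same situations as the shell pipeline.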

.gitignore (vendored, 1 change)

```diff
@@ -7,7 +7,6 @@ __pycache__/

 # Hidden files
 .*
-!/.forgejo
 !/.github
 !/.gitignore
 !/.travis.yml
```

CHANGELOG.md (81 changes)

```diff
@@ -1,85 +1,6 @@
-### ZeroNet 0.9.0 (2023-07-12) Rev4630
- - Fix RDos Issue in Plugins https://github.com/ZeroNetX/ZeroNet-Plugins/pull/9
- - Add trackers to Config.py for failsafety incase missing trackers.txt
- - Added Proxy links
- - Fix pysha3 dep installation issue
- - FileRequest -> Remove Unnecessary check, Fix error wording
- - Fix Response when site is missing for `actionAs`
+### ZeroNet 0.7.2 (2020-09-?) Rev4206?


-### ZeroNet 0.8.5 (2023-02-12) Rev4625
- - Fix(https://github.com/ZeroNetX/ZeroNet/pull/202) for SSL cert gen failed on Windows.
- - default theme-class for missing value in `users.json`.
- - Fetch Stats Plugin changes.

-### ZeroNet 0.8.4 (2022-12-12) Rev4620
- - Increase Minimum Site size to 25MB.

-### ZeroNet 0.8.3 (2022-12-11) Rev4611
- - main.py -> Fix accessing unassigned varible
- - ContentManager -> Support for multiSig
- - SiteStrorage.py -> Fix accessing unassigned varible
- - ContentManager.py Improve Logging of Valid Signers

-### ZeroNet 0.8.2 (2022-11-01) Rev4610
- - Fix Startup Error when plugins dir missing
- - Move trackers to seperate file & Add more trackers
- - Config:: Skip loading missing tracker files
- - Added documentation for getRandomPort fn

-### ZeroNet 0.8.1 (2022-10-01) Rev4600
- - fix readdress loop (cherry-pick previously added commit from conservancy)
- - Remove Patreon badge
- - Update README-ru.md (#177)
- - Include inner_path of failed request for signing in error msg and response
- - Don't Fail Silently When Cert is Not Selected
- - Console Log Updates, Specify min supported ZeroNet version for Rust version Protocol Compatibility
- - Update FUNDING.yml

-### ZeroNet 0.8.0 (2022-05-27) Rev4591
- - Revert File Open to catch File Access Errors.

-### ZeroNet 0.7.9-patch (2022-05-26) Rev4586
- - Use xescape(s) from zeronet-conservancy
- - actionUpdate response Optimisation
- - Fetch Plugins Repo Updates
- - Fix Unhandled File Access Errors
- - Create codeql-analysis.yml

-### ZeroNet 0.7.9 (2022-05-26) Rev4585
- - Rust Version Compatibility for update Protocol msg
- - Removed Non Working Trakers.
- - Dynamically Load Trackers from Dashboard Site.
- - Tracker Supply Improvements.
- - Fix Repo Url for Bug Report
- - First Party Tracker Update Service using Dashboard Site.
- - remove old v2 onion service [#158](https://github.com/ZeroNetX/ZeroNet/pull/158)

-### ZeroNet 0.7.8 (2022-03-02) Rev4580
- - Update Plugins with some bug fixes and Improvements

-### ZeroNet 0.7.6 (2022-01-12) Rev4565
- - Sync Plugin Updates
- - Clean up tor v3 patch [#115](https://github.com/ZeroNetX/ZeroNet/pull/115)
- - Add More Default Plugins to Repo
- - Doubled Site Publish Limits
- - Update ZeroNet Repo Urls [#103](https://github.com/ZeroNetX/ZeroNet/pull/103)
- - UI/UX: Increases Size of Notifications Close Button [#106](https://github.com/ZeroNetX/ZeroNet/pull/106)
- - Moved Plugins to Seperate Repo
- - Added `access_key` variable in Config, this used to access restrited plugins when multiuser plugin is enabled. When MultiUserPlugin is enabled we cannot access some pages like /Stats, this key will remove such restriction with access key.
- - Added `last_connection_id_current_version` to ConnectionServer, helpful to estimate no of connection from current client version.
- - Added current version: connections to /Stats page. see the previous point.

-### ZeroNet 0.7.5 (2021-11-28) Rev4560
- - Add more default trackers
- - Change default homepage address to `1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`
- - Change default update site address to `1Update8crprmciJHwp2WXqkx2c4iYp18`

-### ZeroNet 0.7.3 (2021-11-28) Rev4555
- - Fix xrange is undefined error
- - Fix Incorrect viewport on mobile while loading
- - Tor-V3 Patch by anonymoose


 ### ZeroNet 0.7.1 (2019-07-01) Rev4206
 ### Added
```

243

README-ru.md

243

README-ru.md

|

|

@ -3,131 +3,206 @@

|

|||

[简体中文](./README-zh-cn.md)

|

||||

[English](./README.md)

|

||||

|

||||

Децентрализованные вебсайты, использующие криптографию Bitcoin и протокол BitTorrent — https://zeronet.dev ([Зеркало в ZeroNet](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/)). В отличии от Bitcoin, ZeroNet'у не требуется блокчейн для работы, однако он использует ту же криптографию, чтобы обеспечить сохранность и проверку данных.

|

||||

Децентрализованные вебсайты использующие Bitcoin криптографию и BitTorrent сеть - https://zeronet.dev

|

||||

|

||||

|

||||

## Зачем?

|

||||

|

||||

- Мы верим в открытую, свободную, и неподдающуюся цензуре сеть и связь.

|

||||

- Нет единой точки отказа: Сайт остаётся онлайн, пока его обслуживает хотя бы 1 пир.

|

||||

- Нет затрат на хостинг: Сайты обслуживаются посетителями.

|

||||

- Невозможно отключить: Он нигде, потому что он везде.

|

||||

- Скорость и возможность работать без Интернета: Вы сможете получить доступ к сайту, потому что его копия хранится на вашем компьютере и у ваших пиров.

|

||||

* Мы верим в открытую, свободную, и не отцензуренную сеть и коммуникацию.

|

||||

* Нет единой точки отказа: Сайт онлайн пока по крайней мере 1 пир обслуживает его.

|

||||

* Никаких затрат на хостинг: Сайты обслуживаются посетителями.

|

||||

* Невозможно отключить: Он нигде, потому что он везде.

|

||||

* Быстр и работает оффлайн: Вы можете получить доступ к сайту, даже если Интернет недоступен.

|

||||

|

||||

|

||||

## Особенности

|

||||

* Обновляемые в реальном времени сайты

|

||||

* Поддержка Namecoin .bit доменов

|

||||

* Лёгок в установке: распаковал & запустил

|

||||

* Клонирование вебсайтов в один клик

|

||||

* Password-less [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

|

||||

based authorization: Ваша учетная запись защищена той же криптографией, что и ваш Bitcoin-кошелек

|

||||

* Встроенный SQL-сервер с синхронизацией данных P2P: Позволяет упростить разработку сайта и ускорить загрузку страницы

|

||||

* Анонимность: Полная поддержка сети Tor с помощью скрытых служб .onion вместо адресов IPv4

|

||||

* TLS зашифрованные связи

|

||||

* Автоматическое открытие uPnP порта

|

||||

* Плагин для поддержки многопользовательской (openproxy)

|

||||

* Работает с любыми браузерами и операционными системами

|

||||

|

||||

- Обновление сайтов в реальном времени

|

||||

- Поддержка доменов `.bit` ([Namecoin](https://www.namecoin.org))

|

||||

- Легкая установка: просто распакуйте и запустите

|

||||

- Клонирование сайтов "в один клик"

|

||||

- Беспарольная [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

|

||||

авторизация: Ваша учетная запись защищена той же криптографией, что и ваш Bitcoin-кошелек

|

||||

- Встроенный SQL-сервер с синхронизацией данных P2P: Позволяет упростить разработку сайта и ускорить загрузку страницы

|

||||

- Анонимность: Полная поддержка сети Tor, используя скрытые службы `.onion` вместо адресов IPv4

|

||||

- Зашифрованное TLS подключение

|

||||

- Автоматическое открытие UPnP–порта

|

||||

- Плагин для поддержки нескольких пользователей (openproxy)

|

||||

- Работа с любыми браузерами и операционными системами

|

||||

|

||||

## Текущие ограничения

|

||||

|

||||

- Файловые транзакции не сжаты

|

||||

- Нет приватных сайтов

|

||||

|

||||

## Как это работает?

|

||||

|

||||

- После запуска `zeronet.py` вы сможете посещать сайты в ZeroNet, используя адрес

|

||||

`http://127.0.0.1:43110/{zeronet_адрес}`

|

||||

(Например: `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

|

||||

- Когда вы посещаете новый сайт в ZeroNet, он пытается найти пиров с помощью протокола BitTorrent,

|

||||

чтобы скачать у них файлы сайта (HTML, CSS, JS и т.д.).

|

||||

- После посещения сайта вы тоже становитесь его пиром.

|

||||

- Каждый сайт содержит файл `content.json`, который содержит SHA512 хеши всех остальные файлы

|

||||

и подпись, созданную с помощью закрытого ключа сайта.

|

||||

- Если владелец сайта (тот, кто владеет закрытым ключом для адреса сайта) изменяет сайт, он

|

||||

* После запуска `zeronet.py` вы сможете посетить зайты (zeronet сайты) используя адрес

|

||||

`http://127.0.0.1:43110/{zeronet_address}`

|

||||

(например. `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

|

||||

* Когда вы посещаете новый сайт zeronet, он пытается найти пиров с помощью BitTorrent

|

||||

чтобы загрузить файлы сайтов (html, css, js ...) из них.

|

||||

* Каждый посещенный зайт также обслуживается вами. (Т.е хранится у вас на компьютере)

|

||||

* Каждый сайт содержит файл `content.json`, который содержит все остальные файлы в хэше sha512

|

||||

и подпись, созданную с использованием частного ключа сайта.

|

||||

* Если владелец сайта (у которого есть закрытый ключ для адреса сайта) изменяет сайт, то он/она

|

||||

подписывает новый `content.json` и публикует его для пиров. После этого пиры проверяют целостность `content.json`

|

||||

(используя подпись), скачвают изменённые файлы и распространяют новый контент для других пиров.

|

||||

(используя подпись), они загружают измененные файлы и публикуют новый контент для других пиров.

|

||||

|

||||

#### [Слайд-шоу о криптографии ZeroNet, обновлениях сайтов, многопользовательских сайтах »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

|

||||

#### [Часто задаваемые вопросы »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

|

||||

|

||||

#### [Документация разработчика ZeroNet »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

|

||||

|

||||

[Презентация о криптографии ZeroNet, обновлениях сайтов, многопользовательских сайтах »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

|

||||

[Часто задаваемые вопросы »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

|

||||

[Документация разработчика ZeroNet »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

|

||||

|

||||

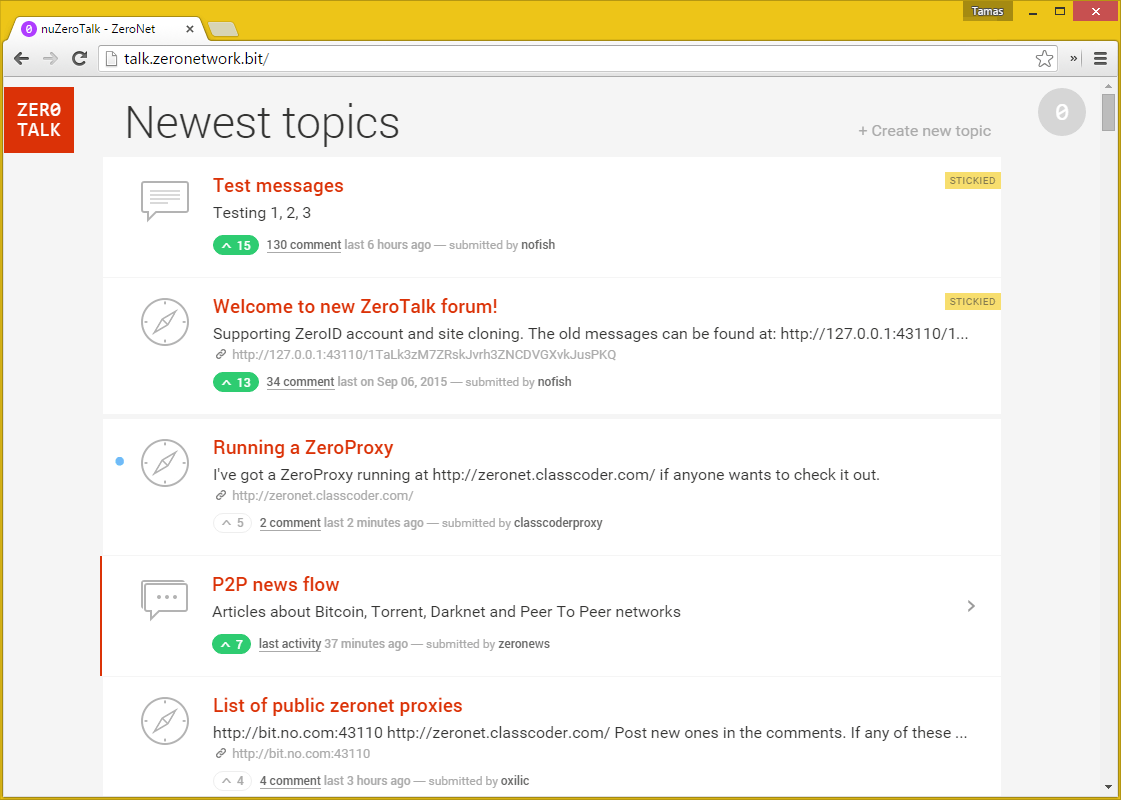

## Скриншоты

|

||||

|

||||

|

||||

|

||||

[Больше скриншотов в документации ZeroNet »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

|

||||

|

||||

## Как присоединиться?

|

||||

#### [Больше скриншотов в ZeroNet документации »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

|

||||

|

||||

### Windows

|

||||

|

||||

- Скачайте и распакуйте архив [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26МБ)

|

||||

- Запустите `ZeroNet.exe`

|

||||

## Как вступить

|

||||

|

||||

### macOS

|

||||

* Скачайте ZeroBundle пакет:

|

||||

* [Microsoft Windows](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip)

|

||||

* [Apple macOS](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip)

|

||||

* [Linux 64-bit](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip)

|

||||

* [Linux 32-bit](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip)

|

||||

* Распакуйте где угодно

|

||||

* Запустите `ZeroNet.exe` (win), `ZeroNet(.app)` (osx), `ZeroNet.sh` (linux)

|

||||

|

||||

- Скачайте и распакуйте архив [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14МБ)

|

||||

- Запустите `ZeroNet.app`

|

||||

### Linux терминал

|

||||

|

||||

### Linux (64 бит)

|

||||

* `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`

|

||||

* `unzip ZeroNet-linux.zip`

|

||||

* `cd ZeroNet-linux`

|

||||

* Запустите с помощью `./ZeroNet.sh`

|

||||

|

||||

- Скачайте и распакуйте архив [ZeroNet-linux.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip) (14МБ)

|

||||

- Запустите `./ZeroNet.sh`

|

||||

Он загружает последнюю версию ZeroNet, затем запускает её автоматически.

|

||||

|

||||

> **Note**

|

||||

> Запустите таким образом: `./ZeroNet.sh --ui_ip '*' --ui_restrict ваш_ip_адрес`, чтобы разрешить удалённое подключение к веб–интерфейсу.

|

||||

#### Ручная установка для Debian Linux

|

||||

|

||||

### Docker

|

||||

* `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`

|

||||

* `unzip ZeroNet-src.zip`

|

||||

* `cd ZeroNet`

|

||||

* `sudo apt-get update`

|

||||

* `sudo apt-get install python3-pip`

|

||||

* `sudo python3 -m pip install -r requirements.txt`

|

||||

* Запустите с помощью `python3 zeronet.py`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

Официальный образ находится здесь: https://hub.docker.com/r/canewsin/zeronet/

|

||||

### [Arch Linux](https://www.archlinux.org)

|

||||

|

||||

### Android (arm, arm64, x86)

|

||||

* `git clone https://aur.archlinux.org/zeronet.git`

|

||||

* `cd zeronet`

|

||||

* `makepkg -srci`

|

||||

* `systemctl start zeronet`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

- Для работы требуется Android как минимум версии 5.0 Lollipop

|

||||

- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"

|

||||

alt="Download from Google Play"

|

||||

height="80">](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

|

||||

- Скачать APK: https://github.com/canewsin/zeronet_mobile/releases

|

||||

Смотрите [ArchWiki](https://wiki.archlinux.org)'s [ZeroNet

|

||||

article](https://wiki.archlinux.org/index.php/ZeroNet) для дальнейшей помощи.

|

||||

|

||||

### Android (arm, arm64, x86) Облегчённый клиент только для просмотра (1МБ)

|

||||

### [Gentoo Linux](https://www.gentoo.org)

|

||||

|

||||

- Для работы требуется Android как минимум версии 4.1 Jelly Bean

|

||||

- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"

|

||||

alt="Download from Google Play"

|

||||

height="80">](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

|

||||

* [`layman -a raiagent`](https://github.com/leycec/raiagent)

|

||||

* `echo '>=net-vpn/zeronet-0.5.4' >> /etc/portage/package.accept_keywords`

|

||||

* *(Опционально)* Включить поддержку Tor: `echo 'net-vpn/zeronet tor' >>

|

||||

/etc/portage/package.use`

|

||||

* `emerge zeronet`

|

||||

* `rc-service zeronet start`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

### Установка из исходного кода

|

||||

Смотрите `/usr/share/doc/zeronet-*/README.gentoo.bz2` для дальнейшей помощи.

|

||||

|

||||

```sh

|

||||

wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip

|

||||

unzip ZeroNet-src.zip

|

||||

cd ZeroNet

|

||||

sudo apt-get update

|

||||

sudo apt-get install python3-pip

|

||||

sudo python3 -m pip install -r requirements.txt

|

||||

### [FreeBSD](https://www.freebsd.org/)

|

||||

|

||||

* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

|

||||

* `sysrc zeronet_enable="YES"`

|

||||

* `service zeronet start`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

### [Vagrant](https://www.vagrantup.com/)

* `vagrant up`
* Connect to the VM with `vagrant ssh`
* `cd /vagrant`
* Run `python3 zeronet.py --ui_ip 0.0.0.0`
* Open http://127.0.0.1:43110/ in your browser.

### [Docker](https://www.docker.com/)

* `docker run -d -v <local_data_folder>:/root/data -p 15441:15441 -p 127.0.0.1:43110:43110 canewsin/zeronet`
* This Docker image includes a Tor proxy, which is disabled by default.
Beware that some hosting providers may not allow you to run Tor on their servers.
If you want to enable it, set the `ENABLE_TOR` environment variable to `true` (default: `false`). For example:

 `docker run -d -e "ENABLE_TOR=true" -v <local_data_folder>:/root/data -p 15441:15441 -p 127.0.0.1:43110:43110 canewsin/zeronet`
* Open http://127.0.0.1:43110/ in your browser.

### [Virtualenv](https://virtualenv.readthedocs.org/en/latest/)

* `virtualenv env`
* `source env/bin/activate`
* `pip install msgpack gevent`
* `python3 zeronet.py`
* Open http://127.0.0.1:43110/ in your browser.

## Current limitations

* File transactions are not compressed
* No private sites

## How can I create a ZeroNet site?

Shut down ZeroNet if it is running.

```bash
$ zeronet.py siteCreate
...
- Site private key: 23DKQpzxhbVBrAtvLEc2uvk7DZweh4qL3fn3jpM3LgHDczMK2TtYUq
- Site address: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2
...
- Site created!
$ zeronet.py
...
```

- Run `python3 zeronet.py`

Open the ZeroHello welcome page in your browser at http://127.0.0.1:43110/

Congratulations, you're finished! Now anyone can access your site using
`http://localhost:43110/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2`

## How do I create a site in ZeroNet?

Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

- Click **⋮** > **"Create new, empty site"** in the menu on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site.
- You will be **redirected** to a brand-new site that only you can modify!
- You can find and modify your site's content in the **data/[your_site_address]** directory
- After making changes, open your site, drag the "0" button in the top-right corner to the left, then click the **sign** and **publish** buttons at the bottom

Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## How can I modify a ZeroNet site?

* Modify the files located in the data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2 directory.
When you're done editing:

```bash
$ zeronet.py siteSign 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2
- Signing site: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2...
Private key (input hidden):
```

* Enter the private key you received when you created the site, then:

```bash
$ zeronet.py sitePublish 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2
...
Site:13DNDk..bhC2 Publishing to 3/10 peers...
Site:13DNDk..bhC2 Successfuly published to 3 peers
- Serving files....
```

* That's it! You've successfully signed and published your changes.
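The publish log prints the site as `Site:13DNDk..bhC2`. Judging only from that sample output, the short form keeps the first six and last four characters of the address. The helper below is a hypothetical reconstruction of the abbreviation, not ZeroNet's own code:

```python
def short_address(address: str) -> str:
    """Abbreviate a site address the way the publish log above appears to."""
    # First 6 characters, "..", last 4 characters (inferred from the sample output)
    return "%s..%s" % (address[:6], address[-4:])


site = "13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2"
print("Site:%s" % short_address(site))  # Site:13DNDk..bhC2
```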

## Support the project

- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Recommended)
- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)
- LiberaPay: https://liberapay.com/PramUkesh
- Paypal: https://paypal.me/PramUkesh
- Other methods: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)
- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

#### Thank you!

- Here you can get more information and help, read the changelog, and explore ZeroNet sites: https://www.reddit.com/r/zeronetx/
- Discussion takes place in the [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) channel or on [Gitter](https://gitter.im/canewsin/ZeroNet)
- Email: canews.in@gmail.com

* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronetx/
* Come chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)
* Email: canews.in@gmail.com

19

README.md

@ -1,4 +1,5 @@

# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

<!--TODO: Update Onion Site -->

Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/). Unlike Bitcoin, ZeroNet doesn't need a blockchain to run, but it uses the same cryptography as BTC to ensure data integrity and validation.

@ -99,24 +100,6 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

#### Docker

There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

### Online Proxies

Proxies are like seed boxes for sites (i.e., ZNX running on a cloud VPS); you can try the ZeroNet experience through a proxy. Add your proxy below if you have one.

#### Official ZNX Proxy:

https://proxy.zeronet.dev/

https://zeronet.dev/

#### From Community

https://0net-preview.com/

https://portal.ngnoid.tv/

https://zeronet.ipfsscan.io/

### Install from source

- `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`

32

build-docker-images.sh

Executable file

@ -0,0 +1,32 @@

#!/bin/sh
set -e

arg_push=

case "$1" in
    --push) arg_push=y ; shift ;;
esac

default_suffix=alpine
prefix="${1:-local/}"

for dokerfile in dockerfiles/Dockerfile.* ; do
    suffix="`echo "$dokerfile" | sed 's/.*\/Dockerfile\.//'`"
    image_name="${prefix}zeronet:$suffix"

    latest=""
    t_latest=""
    if [ "$suffix" = "$default_suffix" ] ; then
        latest="${prefix}zeronet:latest"
        t_latest="-t ${latest}"
    fi

    echo "DOCKER BUILD $image_name"
    docker build -f "$dokerfile" -t "$image_name" $t_latest .
    if [ -n "$arg_push" ] ; then
        docker push "$image_name"
        if [ -n "$latest" ] ; then
            docker push "$latest"
        fi
    fi
done
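The tag selection in `build-docker-images.sh` can be restated in Python to make the naming rule explicit. This is a minimal sketch and is not part of the repository: `image_tags` is a hypothetical helper, and `canewsin/` is used only as an example prefix (the Docker Hub name from the README). It mirrors the shell logic. It strips everything up to `Dockerfile.` to get the suffix, tags the image `<prefix>zeronet:<suffix>`, and adds `<prefix>zeronet:latest` only for the default `alpine` suffix.

```python
import re

DEFAULT_SUFFIX = "alpine"  # default_suffix in build-docker-images.sh


def image_tags(dockerfile: str, prefix: str = "local/") -> list:
    """Return the tags the build script would pass to `docker build` (hypothetical helper)."""
    # Same as: echo "$dokerfile" | sed 's/.*\/Dockerfile\.//'
    suffix = re.sub(r".*/Dockerfile\.", "", dockerfile, count=1)
    tags = ["%szeronet:%s" % (prefix, suffix)]
    if suffix == DEFAULT_SUFFIX:
        # Only the default suffix also receives the :latest tag
        tags.append("%szeronet:latest" % prefix)
    return tags


for path in ("dockerfiles/Dockerfile.alpine", "dockerfiles/Dockerfile.alpine3.13",
             "dockerfiles/Dockerfile.ubuntu", "dockerfiles/Dockerfile.ubuntu20.04"):
    print(path, "->", image_tags(path, prefix="canewsin/"))
```

`Dockerfile.alpine` is a symlink to `Dockerfile.alpine3.13`, and the glob `dockerfiles/Dockerfile.*` matches both. As a result, the same Dockerfile is built under both names, and the second build will likely be served mostly from Docker's layer cache.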

1

dockerfiles/Dockerfile.alpine

Symbolic link

@ -0,0 +1 @@

Dockerfile.alpine3.13

44

dockerfiles/Dockerfile.alpine3.13

Normal file

@ -0,0 +1,44 @@

# THIS FILE IS AUTOGENERATED BY gen-dockerfiles.sh.
# SEE zeronet-Dockerfile FOR THE SOURCE FILE.

FROM alpine:3.13

# Base settings
ENV HOME /root

# Install packages

# Install packages

COPY install-dep-packages.sh /root/install-dep-packages.sh

RUN /root/install-dep-packages.sh install

COPY requirements.txt /root/requirements.txt

RUN pip3 install -r /root/requirements.txt \
 && /root/install-dep-packages.sh remove-makedeps \
 && echo "ControlPort 9051" >> /etc/tor/torrc \
 && echo "CookieAuthentication 1" >> /etc/tor/torrc

RUN python3 -V \
 && python3 -m pip list \
 && tor --version \
 && openssl version

# Add Zeronet source

COPY . /root
VOLUME /root/data

# Control if Tor proxy is started
ENV ENABLE_TOR false

WORKDIR /root

# Set upstart command
CMD (! ${ENABLE_TOR} || tor&) && python3 zeronet.py --ui_ip 0.0.0.0 --fileserver_port 26552

# Expose ports
EXPOSE 43110 26552
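The `CMD` above uses shell form, so Docker runs it through `/bin/sh -c`. `${ENABLE_TOR}` expands to the command `true` or `false`. With `false`, `! false` succeeds and `tor` is skipped. With `true`, `! true` fails and the `||` branch starts `tor`. The trailing `&` backgrounds that whole list inside the subshell, and a backgrounded list exits with status 0, so `python3 zeronet.py` runs in either case. The snippet below is a sketch that assumes a POSIX `sh` on the PATH. It replays the same expression with `tor` and ZeroNet replaced by `echo`, so you can observe the branch without either program installed.

```python
import os
import subprocess

# The Dockerfile's CMD, with `tor` and `python3 zeronet.py ...` replaced by echo
SNIPPET = '(! ${ENABLE_TOR} || echo "tor started" &) && echo "zeronet started"'


def run_cmd(enable_tor: str) -> list:
    """Run SNIPPET under sh with ENABLE_TOR set; return the printed lines, sorted."""
    env = dict(os.environ, ENABLE_TOR=enable_tor)
    result = subprocess.run(["sh", "-c", SNIPPET], env=env,
                            capture_output=True, text=True, check=True)
    # Sorted because the backgrounded echo may print before or after the other one
    return sorted(result.stdout.splitlines())


for value in ("false", "true"):
    print("ENABLE_TOR=%s ->" % value, run_cmd(value))
```

Because the variable is executed as a command, only `true` and `false` are meaningful values for `ENABLE_TOR`.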

1

dockerfiles/Dockerfile.ubuntu

Symbolic link

@ -0,0 +1 @@

Dockerfile.ubuntu20.04

44

dockerfiles/Dockerfile.ubuntu20.04

Normal file

@ -0,0 +1,44 @@

# THIS FILE IS AUTOGENERATED BY gen-dockerfiles.sh.
# SEE zeronet-Dockerfile FOR THE SOURCE FILE.

FROM ubuntu:20.04

# Base settings
ENV HOME /root

# Install packages

# Install packages

COPY install-dep-packages.sh /root/install-dep-packages.sh

RUN /root/install-dep-packages.sh install

COPY requirements.txt /root/requirements.txt

RUN pip3 install -r /root/requirements.txt \
 && /root/install-dep-packages.sh remove-makedeps \
 && echo "ControlPort 9051" >> /etc/tor/torrc \
 && echo "CookieAuthentication 1" >> /etc/tor/torrc

RUN python3 -V \
 && python3 -m pip list \
 && tor --version \
 && openssl version

# Add Zeronet source

COPY . /root
VOLUME /root/data

# Control if Tor proxy is started
ENV ENABLE_TOR false

WORKDIR /root

# Set upstart command
CMD (! ${ENABLE_TOR} || tor&) && python3 zeronet.py --ui_ip 0.0.0.0 --fileserver_port 26552

# Expose ports
EXPOSE 43110 26552

34

dockerfiles/gen-dockerfiles.sh

Executable file

@ -0,0 +1,34 @@

#!/bin/sh

set -e

die() {
    echo "$@" > /dev/stderr
    exit 1
}

for os in alpine:3.13 ubuntu:20.04 ; do
    prefix="`echo "$os" | sed -e 's/://'`"
    short_prefix="`echo "$os" | sed -e 's/:.*//'`"

    zeronet="zeronet-Dockerfile"

    dockerfile="Dockerfile.$prefix"
    dockerfile_short="Dockerfile.$short_prefix"

    echo "GEN $dockerfile"

    if ! test -f "$zeronet" ; then
        die "No such file: $zeronet"
    fi

    echo "\
# THIS FILE IS AUTOGENERATED BY gen-dockerfiles.sh.
# SEE $zeronet FOR THE SOURCE FILE.

FROM $os

`cat "$zeronet"`
" > "$dockerfile.tmp" && mv "$dockerfile.tmp" "$dockerfile" && ln -s -f "$dockerfile" "$dockerfile_short"
done
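`gen-dockerfiles.sh` derives two file names from each `os` image tag. The full name drops only the colon (`alpine:3.13` becomes `Dockerfile.alpine3.13`). The short name keeps only the part before the colon (`Dockerfile.alpine`), which then becomes a symlink to the full file. The sketch below restates that naming and the generated header in Python. The helper names are hypothetical, and `render` approximates the file text while ignoring any backslash-escape handling a particular `echo` implementation might apply.

```python
SOURCE = "zeronet-Dockerfile"


def dockerfile_names(os_tag: str) -> tuple:
    """Mirror the two sed calls in gen-dockerfiles.sh (hypothetical helper)."""
    prefix = os_tag.replace(":", "", 1)      # sed -e 's/://'   -> alpine3.13
    short_prefix = os_tag.split(":", 1)[0]   # sed -e 's/:.*//' -> alpine
    return "Dockerfile." + prefix, "Dockerfile." + short_prefix


def render(os_tag: str, template: str) -> str:
    """Approximate the generated Dockerfile text for one base image."""
    # Command substitution of `cat` drops trailing newlines; the quoted string
    # ends with one newline and echo adds another, leaving a final blank line.
    body = template.rstrip("\n")
    return ("# THIS FILE IS AUTOGENERATED BY gen-dockerfiles.sh.\n"
            "# SEE %s FOR THE SOURCE FILE.\n\n"
            "FROM %s\n\n"
            "%s\n\n") % (SOURCE, os_tag, body)


for os_tag in ("alpine:3.13", "ubuntu:20.04"):
    full, short = dockerfile_names(os_tag)
    print("%s -> %s (symlink %s)" % (os_tag, full, short))
```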

@ -1,14 +1,16 @@
FROM alpine:3.12

#Base settings
# Base settings
ENV HOME /root

# Install packages

COPY install-dep-packages.sh /root/install-dep-packages.sh

RUN /root/install-dep-packages.sh install

COPY requirements.txt /root/requirements.txt

#Install ZeroNet
RUN apk --update --no-cache --no-progress add python3 python3-dev gcc libffi-dev musl-dev make tor openssl \
 && pip3 install -r /root/requirements.txt \
 && apk del python3-dev gcc libffi-dev musl-dev make \
RUN pip3 install -r /root/requirements.txt \
 && /root/install-dep-packages.sh remove-makedeps \
 && echo "ControlPort 9051" >> /etc/tor/torrc \
 && echo "CookieAuthentication 1" >> /etc/tor/torrc

@ -17,18 +19,18 @@ RUN python3 -V \
 && tor --version \
 && openssl version

#Add Zeronet source
# Add Zeronet source

COPY . /root
VOLUME /root/data

#Control if Tor proxy is started
# Control if Tor proxy is started
ENV ENABLE_TOR false

WORKDIR /root

#Set upstart command
# Set upstart command
CMD (! ${ENABLE_TOR} || tor&) && python3 zeronet.py --ui_ip 0.0.0.0 --fileserver_port 26552

#Expose ports
# Expose ports
EXPOSE 43110 26552

49

install-dep-packages.sh

Executable file

@ -0,0 +1,49 @@

#!/bin/sh
set -e

do_alpine() {
    local deps="python3 py3-pip openssl tor"
    local makedeps="python3-dev gcc g++ libffi-dev musl-dev make automake autoconf libtool"

    case "$1" in
        install)
            apk --update --no-cache --no-progress add $deps $makedeps
            ;;
        remove-makedeps)
            apk del $makedeps
            ;;
    esac
}

do_ubuntu() {
    local deps="python3 python3-pip openssl tor"
    local makedeps="python3-dev gcc g++ libffi-dev make automake autoconf libtool"

    case "$1" in
        install)
            apt-get update && \
                apt-get install --no-install-recommends -y $deps $makedeps && \
                rm -rf /var/lib/apt/lists/*
            ;;
        remove-makedeps)
            apt-get remove -y $makedeps
            ;;
    esac
}

if test -f /etc/os-release ; then
    . /etc/os-release
elif test -f /usr/lib/os-release ; then
    . /usr/lib/os-release
else
    echo "No such file: /etc/os-release" > /dev/stderr
    exit 1
fi

case "$ID" in
    ubuntu) do_ubuntu "$@" ;;
    alpine) do_alpine "$@" ;;
    *)
        echo "Unsupported OS ID: $ID" > /dev/stderr
        exit 1
esac
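`install-dep-packages.sh` sources the os-release file and dispatches on its `ID` field. `install` adds both the runtime and build packages, while `remove-makedeps` removes only the build packages. The sketch below restates that dispatch in Python with hypothetical helper names. The package lists are copied from the script. The simple `KEY=value` parser is an assumption that handles plain and shell-quoted values, which covers typical os-release files.

```python
import shlex

# Package lists copied from install-dep-packages.sh
PACKAGES = {
    "alpine": {
        "deps": "python3 py3-pip openssl tor".split(),
        "makedeps": "python3-dev gcc g++ libffi-dev musl-dev make automake autoconf libtool".split(),
    },
    "ubuntu": {
        "deps": "python3 python3-pip openssl tor".split(),
        "makedeps": "python3-dev gcc g++ libffi-dev make automake autoconf libtool".split(),
    },
}


def parse_os_release(text: str) -> dict:
    """Parse os-release style KEY=value lines; values may be shell-quoted."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        words = shlex.split(value)
        info[key] = words[0] if words else ""
    return info


def packages_for(os_release_text: str, action: str) -> list:
    """Packages the script would install or remove for the given action."""
    os_id = parse_os_release(os_release_text).get("ID", "")
    if os_id not in PACKAGES:
        raise ValueError("Unsupported OS ID: %s" % os_id)  # the script exits 1 here
    pkgs = PACKAGES[os_id]
    if action == "install":
        return pkgs["deps"] + pkgs["makedeps"]
    if action == "remove-makedeps":
        return pkgs["makedeps"]
    return []  # other actions fall through the script's case and do nothing


sample = 'NAME="Alpine Linux"\nID=alpine\nVERSION_ID=3.13.12\n'
print(packages_for(sample, "remove-makedeps"))
```

On Python 3.10 and later, `platform.freedesktop_os_release()` reads the same `/etc/os-release` and `/usr/lib/os-release` files and returns a dict, so it could replace the hand-written parser.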

1

plugins

@ -1 +0,0 @@

Subproject commit 689d9309f73371f4681191b125ec3f2e14075eeb

@ -3,7 +3,7 @@ greenlet==0.4.16; python_version <= "3.6"
gevent>=20.9.0; python_version >= "3.7"
msgpack>=0.4.4
base58
merkletools @ git+https://github.com/ZeroNetX/pymerkletools.git@dev
merkletools
rsa
PySocks>=1.6.8
pyasn1
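The `greenlet` and `gevent` lines carry PEP 508 environment markers, so pip installs each one only on matching interpreters. pip itself evaluates markers with the `packaging` library. The stdlib-only sketch below is a simplified stand-in, with hypothetical helper names, that handles just the numeric `python_version` comparisons used here. It also shows why versions must be compared as numbers rather than as strings.

```python
import operator
import sys

# Only the comparison operators needed for simple python_version markers
OPS = {"<=": operator.le, ">=": operator.ge, "<": operator.lt, ">": operator.gt, "==": operator.eq}


def version_tuple(version: str) -> tuple:
    """Turn "3.10" into (3, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def marker_matches(op: str, wanted: str, current: str) -> bool:
    """Evaluate `python_version <op> "<wanted>"` against the given interpreter version."""
    return OPS[op](version_tuple(current), version_tuple(wanted))


current = "%d.%d" % sys.version_info[:2]
print("greenlet==0.4.16 selected:", marker_matches("<=", "3.6", current))
print("gevent>=20.9.0 selected:", marker_matches(">=", "3.7", current))
print("naive string comparison says 3.10 < 3.7:", "3.10" < "3.7")  # True, which is wrong for versions
```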

@ -13,8 +13,8 @@ import time
class Config(object):

    def __init__(self, argv):
        self.version = "0.9.0"
        self.rev = 4630
        self.version = "0.7.6"
        self.rev = 4565
        self.argv = argv
        self.action = None
        self.test_parser = None

@ -82,12 +82,45 @@ class Config(object):
        from Crypt import CryptHash
        access_key_default = CryptHash.random(24, "base64") # Used to allow restricted plugins when multiuser plugin is enabled
        trackers = [
            # by zeroseed at http://127.0.0.1:43110/19HKdTAeBh5nRiKn791czY7TwRB1QNrf1Q/?:users/1HvNGwHKqhj3ZMEM53tz6jbdqe4LRpanEu:zn:dc17f896-bf3f-4962-bdd4-0a470040c9c5
            "zero://k5w77dozo3hy5zualyhni6vrh73iwfkaofa64abbilwyhhd3wgenbjqd.onion:15441",
            "zero://2kcb2fqesyaevc4lntogupa4mkdssth2ypfwczd2ov5a3zo6ytwwbayd.onion:15441",
            "zero://my562dxpjropcd5hy3nd5pemsc4aavbiptci5amwxzbelmzgkkuxpvid.onion:15441",
            "zero://pn4q2zzt2pw4nk7yidxvsxmydko7dfibuzxdswi6gu6ninjpofvqs2id.onion:15441",
            "zero://6i54dd5th73oelv636ivix6sjnwfgk2qsltnyvswagwphub375t3xcad.onion:15441",
            "zero://tl74auz4tyqv4bieeclmyoe4uwtoc2dj7fdqv4nc4gl5j2bwg2r26bqd.onion:15441",
            "zero://wlxav3szbrdhest4j7dib2vgbrd7uj7u7rnuzg22cxbih7yxyg2hsmid.onion:15441",
            "zero://zy7wttvjtsijt5uwmlar4yguvjc2gppzbdj4v6bujng6xwjmkdg7uvqd.onion:15441",

            # ZeroNet 0.7.2 defaults:
            "zero://boot3rdez4rzn36x.onion:15441",
            "http://open.acgnxtracker.com:80/announce", # DE
            "http://tracker.bt4g.com:2095/announce", # Cloudflare
            "http://tracker.files.fm:6969/announce",
            "http://t.publictracker.xyz:6969/announce",
            "zero://2602:ffc5::c5b2:5360:26312", # US/ATL
            "zero://145.239.95.38:15441",
            "zero://188.116.183.41:26552",
            "zero://145.239.95.38:15441",
            "zero://211.125.90.79:22234",
            "zero://216.189.144.82:26312",
            "zero://45.77.23.92:15555",
            "zero://51.15.54.182:21041",
            "https://tracker.lilithraws.cf:443/announce",
            "https://tracker.babico.name.tr:443/announce",
            "udp://code2chicken.nl:6969/announce",
            "udp://abufinzio.monocul.us:6969/announce",
            "udp://tracker.0x.tf:6969/announce",
            "udp://tracker.zerobytes.xyz:1337/announce",
            "udp://vibe.sleepyinternetfun.xyz:1738/announce",
            "udp://www.torrent.eu.org:451/announce",
            "zero://k5w77dozo3hy5zualyhni6vrh73iwfkaofa64abbilwyhhd3wgenbjqd.onion:15441",
            "zero://2kcb2fqesyaevc4lntogupa4mkdssth2ypfwczd2ov5a3zo6ytwwbayd.onion:15441",
            "zero://gugt43coc5tkyrhrc3esf6t6aeycvcqzw7qafxrjpqbwt4ssz5czgzyd.onion:15441",
            "zero://hb6ozikfiaafeuqvgseiik4r46szbpjfu66l67wjinnyv6dtopuwhtqd.onion:15445",
            "zero://75pmmcbp4vvo2zndmjnrkandvbg6jyptygvvpwsf2zguj7urq7t4jzyd.onion:7777",
            "zero://dw4f4sckg2ultdj5qu7vtkf3jsfxsah3mz6pivwfd6nv3quji3vfvhyd.onion:6969",
            "zero://5vczpwawviukvd7grfhsfxp7a6huz77hlis4fstjkym5kmf4pu7i7myd.onion:15441",
            "zero://ow7in4ftwsix5klcbdfqvfqjvimqshbm2o75rhtpdnsderrcbx74wbad.onion:15441",
            "zero://agufghdtniyfwty3wk55drxxwj2zxgzzo7dbrtje73gmvcpxy4ngs4ad.onion:15441",
            "zero://qn65si4gtcwdiliq7vzrwu62qrweoxb6tx2cchwslaervj6szuje66qd.onion:26117",
        ]
        # Platform specific
        if sys.platform.startswith("win"):

@ -251,12 +284,31 @@ class Config(object):
        self.parser.add_argument('--access_key', help='Plugin access key default: Random key generated at startup', default=access_key_default, metavar='key')
        self.parser.add_argument('--dist_type', help='Type of installed distribution', default='source')

        self.parser.add_argument('--size_limit', help='Default site size limit in MB', default=25, type=int, metavar='limit')
        self.parser.add_argument('--size_limit', help='Default site size limit in MB', default=10, type=int, metavar='limit')
        self.parser.add_argument('--file_size_limit', help='Maximum per file size limit in MB', default=10, type=int, metavar='limit')
        self.parser.add_argument('--connected_limit', help='Max connected peer per site', default=8, type=int, metavar='connected_limit')
        self.parser.add_argument('--global_connected_limit', help='Max connections', default=512, type=int, metavar='global_connected_limit')
        self.parser.add_argument('--connected_limit', help='Max number of connected peers per site. Soft limit.', default=10, type=int, metavar='connected_limit')
        self.parser.add_argument('--global_connected_limit', help='Max number of connections. Soft limit.', default=512, type=int, metavar='global_connected_limit')
        self.parser.add_argument('--workers', help='Download workers per site', default=5, type=int, metavar='workers')

        self.parser.add_argument('--site_announce_interval_min', help='Site announce interval for the most active sites, in minutes.', default=4, type=int, metavar='site_announce_interval_min')
        self.parser.add_argument('--site_announce_interval_max', help='Site announce interval for inactive sites, in minutes.', default=30, type=int, metavar='site_announce_interval_max')

        self.parser.add_argument('--site_peer_check_interval_min', help='Connectable peers check interval for the most active sites, in minutes.', default=5, type=int, metavar='site_peer_check_interval_min')
        self.parser.add_argument('--site_peer_check_interval_max', help='Connectable peers check interval for inactive sites, in minutes.', default=20, type=int, metavar='site_peer_check_interval_max')

        self.parser.add_argument('--site_update_check_interval_min', help='Site update check interval for the most active sites, in minutes.', default=5, type=int, metavar='site_update_check_interval_min')
        self.parser.add_argument('--site_update_check_interval_max', help='Site update check interval for inactive sites, in minutes.', default=45, type=int, metavar='site_update_check_interval_max')

        self.parser.add_argument('--site_connectable_peer_count_max', help='Search for as many connectable peers for the most active sites', default=10, type=int, metavar='site_connectable_peer_count_max')
        self.parser.add_argument('--site_connectable_peer_count_min', help='Search for as many connectable peers for inactive sites', default=2, type=int, metavar='site_connectable_peer_count_min')

        self.parser.add_argument('--send_back_lru_size', help='Size of the send back LRU cache', default=5000, type=int, metavar='send_back_lru_size')
        self.parser.add_argument('--send_back_limit', help='Send no more than so many files at once back to peer, when we discovered that the peer held older file versions', default=3, type=int, metavar='send_back_limit')

        self.parser.add_argument('--expose_no_ownership', help='By default, ZeroNet tries checking updates for own sites more frequently. This can be used by a third party for revealing the network addresses of a site owner. If this option is enabled, ZeroNet performs the checks in the same way for any sites.', type='bool', choices=[True, False], default=False)

        self.parser.add_argument('--simultaneous_connection_throttle_threshold', help='Throttle opening new connections when the number of outgoing connections in not fully established state exceeds the threshold.', default=15, type=int, metavar='simultaneous_connection_throttle_threshold')

        self.parser.add_argument('--fileserver_ip', help='FileServer bind address', default="*", metavar='ip')
        self.parser.add_argument('--fileserver_port', help='FileServer bind port (0: randomize)', default=0, type=int, metavar='port')
        self.parser.add_argument('--fileserver_port_range', help='FileServer randomization range', default="10000-40000", metavar='port')
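`--expose_no_ownership` is declared with `type='bool'`. Stock argparse treats a string `type` as a name to look up in its registry. This option therefore works only if a converter has been registered under `'bool'` somewhere else in Config, and that part of the file is not shown in this diff. The minimal sketch below illustrates the mechanism. It assumes a hypothetical `str_to_bool` converter and uses argparse's sparsely documented `register()` method.

```python
import argparse


def str_to_bool(value: str) -> bool:
    """Hypothetical converter: common spellings of "true" map to True."""
    return value.strip().lower() in ("yes", "true", "t", "1")


parser = argparse.ArgumentParser()
# Make type='bool' resolve to str_to_bool, as Config presumably does elsewhere
parser.register("type", "bool", str_to_bool)
parser.add_argument("--expose_no_ownership", type="bool", choices=[True, False], default=False)
parser.add_argument("--site_announce_interval_min", default=4, type=int)
parser.add_argument("--site_announce_interval_max", default=30, type=int)

args = parser.parse_args(["--expose_no_ownership", "true", "--site_announce_interval_max", "60"])
print(args.expose_no_ownership, args.site_announce_interval_min, args.site_announce_interval_max)  # True 4 60
```

Without the `register()` call, `add_argument` raises `ValueError`, because the string `'bool'` is not callable.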

@ -319,7 +371,8 @@ class Config(object):

    def loadTrackersFile(self):
        if not self.trackers_file:
            self.trackers_file = ["trackers.txt", "{data_dir}/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d/trackers.txt"]
            return None

        self.trackers = self.arguments.trackers[:]

        for trackers_file in self.trackers_file:

@ -331,9 +384,6 @@ class Config(object):
                else: # Relative to zeronet.py
                    trackers_file_path = self.start_dir + "/" + trackers_file

                if not os.path.exists(trackers_file_path):
                    continue

                for line in open(trackers_file_path):
                    tracker = line.strip()
                    if "://" in tracker and tracker not in self.trackers:
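`loadTrackersFile` copies the trackers given as arguments. It then appends each line of every trackers file that looks like a URL (contains `://`) and is not already in the list, and it skips missing files. The sketch below restates that merge as a standalone function with the hypothetical name `load_trackers`. Two branches are assumptions: `{data_dir}` substitution and absolute paths. Only the "relative to zeronet.py" branch is visible in this hunk, although the default list uses a `{data_dir}` placeholder.

```python
import os
import tempfile


def load_trackers(base_trackers, trackers_files, start_dir, data_dir):
    """Standalone sketch of the tracker-file merge in loadTrackersFile."""
    trackers = base_trackers[:]  # copy, like self.arguments.trackers[:]
    for trackers_file in trackers_files:
        if trackers_file.startswith("{data_dir}"):
            # Assumption: the placeholder is replaced with the data directory
            path = trackers_file.replace("{data_dir}", data_dir, 1)
        elif os.path.isabs(trackers_file):
            path = trackers_file  # Assumption: absolute paths are used as-is
        else:  # Relative to zeronet.py
            path = os.path.join(start_dir, trackers_file)
        if not os.path.exists(path):
            continue  # missing trackers files are skipped
        with open(path) as f:
            for line in f:
                tracker = line.strip()
                # Keep URL-like lines that are not known yet
                if "://" in tracker and tracker not in trackers:
                    trackers.append(tracker)
    return trackers


with tempfile.TemporaryDirectory() as start_dir:
    with open(os.path.join(start_dir, "trackers.txt"), "w") as f:
        f.write("zero://145.239.95.38:15441\n"
                "\n"
                "not a tracker\n"
                "udp://tracker.0x.tf:6969/announce\n"
                "udp://tracker.0x.tf:6969/announce\n")
    result = load_trackers(
        ["zero://145.239.95.38:15441"],
        ["trackers.txt", "{data_dir}/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d/trackers.txt"],
        start_dir,
        os.path.join(start_dir, "data"),  # does not exist, so the second file is skipped
    )

print(result)
```

The duplicate and non-URL lines are dropped. The copied base list itself is not deduplicated, which matches the `[:]` copy in the original.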

@ -17,12 +17,13 @@ from util import helper
class Connection(object):
    __slots__ = (
        "sock", "sock_wrapped", "ip", "port", "cert_pin", "target_onion", "id", "protocol", "type", "server", "unpacker", "unpacker_bytes", "req_id", "ip_type",
        "handshake", "crypt", "connected", "event_connected", "closed", "start_time", "handshake_time", "last_recv_time", "is_private_ip", "is_tracker_connection",
        "handshake", "crypt", "connected", "connecting", "event_connected", "closed", "start_time", "handshake_time", "last_recv_time", "is_private_ip", "is_tracker_connection",
        "last_message_time", "last_send_time", "last_sent_time", "incomplete_buff_recv", "bytes_recv", "bytes_sent", "cpu_time", "send_lock",
        "last_ping_delay", "last_req_time", "last_cmd_sent", "last_cmd_recv", "bad_actions", "sites", "name", "waiting_requests", "waiting_streams"
    )

    def __init__(self, server, ip, port, sock=None, target_onion=None, is_tracker_connection=False):
        self.server = server
        self.sock = sock
        self.cert_pin = None
        if "#" in ip:
@ -42,7 +43,6 @@ class Connection(object):
        self.is_private_ip = False
        self.is_tracker_connection = is_tracker_connection

        self.server = server
        self.unpacker = None # Stream incoming socket messages here
        self.unpacker_bytes = 0 # How many bytes the unpacker received
        self.req_id = 0 # Last request id
@ -50,6 +50,7 @@ class Connection(object):
        self.crypt = None # Connection encryption method
        self.sock_wrapped = False # Socket wrapped to encryption

        self.connecting = False
        self.connected = False
        self.event_connected = gevent.event.AsyncResult() # Solves on handshake received
        self.closed = False
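The hunks above add `"connecting"` to `__slots__` in the same change that adds `self.connecting = False` to `__init__`. The two changes must go together, because a class that defines `__slots__` (and has no `__dict__`) rejects assignment to any attribute it does not list. The minimal demonstration below uses two hypothetical classes:

```python
class ConnectionWithoutSlot:
    __slots__ = ("connected",)  # "connecting" not declared

    def __init__(self):
        self.connected = False
        self.connecting = False  # raises AttributeError


class ConnectionWithSlot:
    __slots__ = ("connected", "connecting")

    def __init__(self):
        self.connecting = False
        self.connected = False


def can_construct(cls) -> bool:
    """Return True if cls() succeeds, False if __init__ hits an undeclared slot."""
    try:
        cls()
    except AttributeError:
        return False
    return True


print(can_construct(ConnectionWithoutSlot), can_construct(ConnectionWithSlot))  # False True
```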

@ -81,11 +82,11 @@ class Connection(object):

    def setIp(self, ip):
        self.ip = ip
        self.ip_type = helper.getIpType(ip)
        self.ip_type = self.server.getIpType(ip)
        self.updateName()

    def createSocket(self):
        if helper.getIpType(self.ip) == "ipv6" and not hasattr(socket, "socket_noproxy"):
        if self.server.getIpType(self.ip) == "ipv6" and not hasattr(socket, "socket_noproxy"):
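`setIp` and `createSocket` switch from `helper.getIpType()` to `self.server.getIpType()`, and `createSocket` branches on whether the result is `"ipv6"`. This diff shows neither implementation. As a rough illustration only, the sketch below uses the standard `ipaddress` module to build a hypothetical classifier. The `"ipv4"`, `"onion"`, and `"unknown"` category names are assumptions beyond the `"ipv6"` visible above.

```python
import ipaddress


def get_ip_type(ip: str) -> str:
    """Hypothetical classifier: "onion", "ipv4", "ipv6", or "unknown"."""
    if ip.endswith(".onion"):
        return "onion"
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return "unknown"  # hostnames and malformed strings
    return "ipv6" if addr.version == 6 else "ipv4"


for ip in ("145.239.95.38", "2602:ffc5::c5b2:5360",
           "k5w77dozo3hy5zualyhni6vrh73iwfkaofa64abbilwyhhd3wgenbjqd.onion", "tracker.files.fm"):
    print(ip, "->", get_ip_type(ip))
```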