+- [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+- Скачать APK: https://github.com/canewsin/zeronet_mobile/releases

-### [Gentoo Linux](https://www.gentoo.org)

+### Android (arm, arm64, x86) Облегчённый клиент только для просмотра (1МБ)

-* [`layman -a raiagent`](https://github.com/leycec/raiagent)

-* `echo '>=net-vpn/zeronet-0.5.4' >> /etc/portage/package.accept_keywords`

-* *(Опционально)* Включить поддержку Tor: `echo 'net-vpn/zeronet tor' >>

- /etc/portage/package.use`

-* `emerge zeronet`

-* `rc-service zeronet start`

-* Откройте http://127.0.0.1:43110/ в вашем браузере.

+- Для работы требуется Android как минимум версии 4.1 Jelly Bean

+- [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

-Смотрите `/usr/share/doc/zeronet-*/README.gentoo.bz2` для дальнейшей помощи.

+### Установка из исходного кода

-### [FreeBSD](https://www.freebsd.org/)

-

-* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* Откройте http://127.0.0.1:43110/ в вашем браузере.

-

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* Подключитесь к VM с помощью `vagrant ssh`

-* `cd /vagrant`

-* Запустите `python2 zeronet.py --ui_ip 0.0.0.0`

-* Откройте http://127.0.0.1:43110/ в вашем браузере.

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v

+ - [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+ - APK download: https://github.com/canewsin/zeronet_mobile/releases

-* `pkg install zeronet` 或者 `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* 在你的浏览器中打开 http://127.0.0.1:43110/

-

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* 通过 `vagrant ssh` 连接到 VM

-* `cd /vagrant`

-* 运行 `python2 zeronet.py --ui_ip 0.0.0.0`

-* 在你的浏览器中打开 http://127.0.0.1:43110/

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v

+ - [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

## 现有限制

-* ~~没有类似于 BitTorrent 的文件拆分来支持大文件~~ (已添加大文件支持)

-* ~~没有比 BitTorrent 更好的匿名性~~ (已添加内置的完整 Tor 支持)

-* 传输文件时没有压缩~~和加密~~ (已添加 TLS 支持)

+* 传输文件时没有压缩

* 不支持私有站点

-## 如何创建一个 ZeroNet 站点?

+## 如何创建一个 ZeroNet 站点?

+ * 点击 [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) 站点的 **⋮** > **「新建空站点」** 菜单项

+ * 您将被**重定向**到一个全新的站点,该站点只能由您修改

+ * 您可以在 **data/[您的站点地址]** 目录中找到并修改网站的内容

+ * 修改后打开您的网站,将右上角的「0」按钮拖到左侧,然后点击底部的**签名**并**发布**按钮

-如果 zeronet 在运行,把它关掉

-执行:

-```bash

-$ zeronet.py siteCreate

-...

-- Site private key: 23DKQpzxhbVBrAtvLEc2uvk7DZweh4qL3fn3jpM3LgHDczMK2TtYUq

-- Site address: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-- Site created!

-$ zeronet.py

-...

-```

-

-你已经完成了! 现在任何人都可以通过

-`http://localhost:43110/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2`

-来访问你的站点

-

-下一步: [ZeroNet 开发者文档](https://zeronet.io/docs/site_development/getting_started/)

-

-

-## 我要如何修改 ZeroNet 站点?

-

-* 修改位于 data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2 的目录.

- 在你改好之后:

-

-```bash

-$ zeronet.py siteSign 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-- Signing site: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2...

-Private key (input hidden):

-```

-

-* 输入你在创建站点时获得的私钥

-

-```bash

-$ zeronet.py sitePublish 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-Site:13DNDk..bhC2 Publishing to 3/10 peers...

-Site:13DNDk..bhC2 Successfuly published to 3 peers

-- Serving files....

-```

-

-* 就是这样! 你现在已经成功的签名并推送了你的更改。

-

+接下来的步骤:[ZeroNet 开发者文档](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## 帮助这个项目

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](!https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-### 赞助商

+#### 感谢您!

-* 在 OSX/Safari 下 [BrowserStack.com](https://www.browserstack.com) 带来更好的兼容性

-

-#### 感谢!

-

-* 更多信息, 帮助, 变更记录和 zeronet 站点: https://www.reddit.com/r/zeronet/

-* 在: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) 和我们聊天,或者使用 [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* [这里](https://gitter.im/ZeroNet-zh/Lobby)是一个 gitter 上的中文聊天室

-* Email: hello@noloop.me

+* 更多信息,帮助,变更记录和 zeronet 站点:https://www.reddit.com/r/zeronetx/

+* 前往 [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) 或 [gitter](https://gitter.im/canewsin/ZeroNet) 和我们聊天

+* [这里](https://gitter.im/canewsin/ZeroNet)是一个 gitter 上的中文聊天室

+* Email: canews.in@gmail.com

diff --git a/README.md b/README.md

index 07d09ddb..70b79adc 100644

--- a/README.md

+++ b/README.md

@@ -1,9 +1,6 @@

-# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)

-

-[简体中文](./README-zh-cn.md)

-[Русский](./README-ru.md)

-

-Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io

+# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

+

+Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/), Unlike Bitcoin, ZeroNet Doesn't need a blockchain to run, But uses cryptography used by BTC, to ensure data integrity and validation.

## Why?

@@ -36,22 +33,22 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* After starting `zeronet.py` you will be able to visit zeronet sites using

`http://127.0.0.1:43110/{zeronet_address}` (eg.

- `http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).

+ `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

* When you visit a new zeronet site, it tries to find peers using the BitTorrent

network so it can download the site files (html, css, js...) from them.

* Each visited site is also served by you.

* Every site contains a `content.json` file which holds all other files in a sha512 hash

and a signature generated using the site's private key.

* If the site owner (who has the private key for the site address) modifies the

- site, then he/she signs the new `content.json` and publishes it to the peers.

+ site and signs the new `content.json` and publishes it to the peers.

Afterwards, the peers verify the `content.json` integrity (using the

signature), they download the modified files and publish the new content to

other peers.

#### [Slideshow about ZeroNet cryptography, site updates, multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

-#### [Frequently asked questions »](https://zeronet.io/docs/faq/)

+#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

-#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)

+#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)
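The hunk above says that `content.json` lists every other site file by sha512 hash and that peers check integrity before accepting an update. The hashing half of that idea can be sketched as follows. This is a minimal illustration, not ZeroNet's implementation: the manifest layout (`files` mapping a relative path to `sha512` and `size`) and the helper names `build_manifest` and `changed_files` are assumptions made for the example, and signature verification with the site's key is left out entirely.

```python
import hashlib
from pathlib import Path


def sha512_hex(path: Path) -> str:
    """Return the hex SHA-512 digest of a file, read in chunks."""
    digest = hashlib.sha512()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(site_dir: Path) -> dict:
    """Build a hypothetical manifest: relative path -> {"sha512", "size"}."""
    files = {}
    for path in sorted(site_dir.rglob("*")):
        # Skip the manifest itself; it cannot contain its own hash.
        if path.is_file() and path.name != "content.json":
            rel = path.relative_to(site_dir).as_posix()
            files[rel] = {"sha512": sha512_hex(path), "size": path.stat().st_size}
    return {"files": files}


def changed_files(site_dir: Path, manifest: dict) -> list:
    """List manifest entries whose file is missing or no longer matches."""
    mismatched = []
    for rel, expected in manifest["files"].items():
        path = site_dir / rel
        if (not path.is_file()
                or path.stat().st_size != expected["size"]
                or sha512_hex(path) != expected["sha512"]):
            mismatched.append(rel)
    return mismatched
```

In this sketch a peer would call `changed_files` with the manifest it received and re-download only the paths returned, which mirrors the "download the modified files" step in the text.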

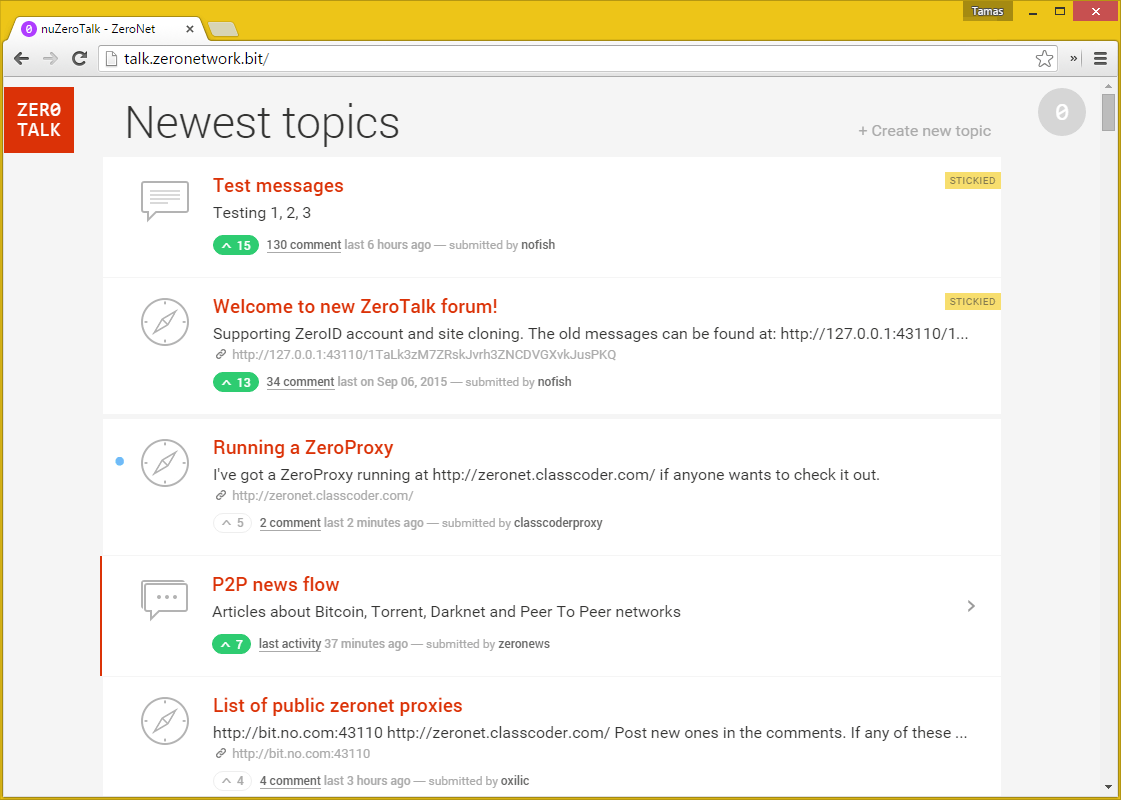

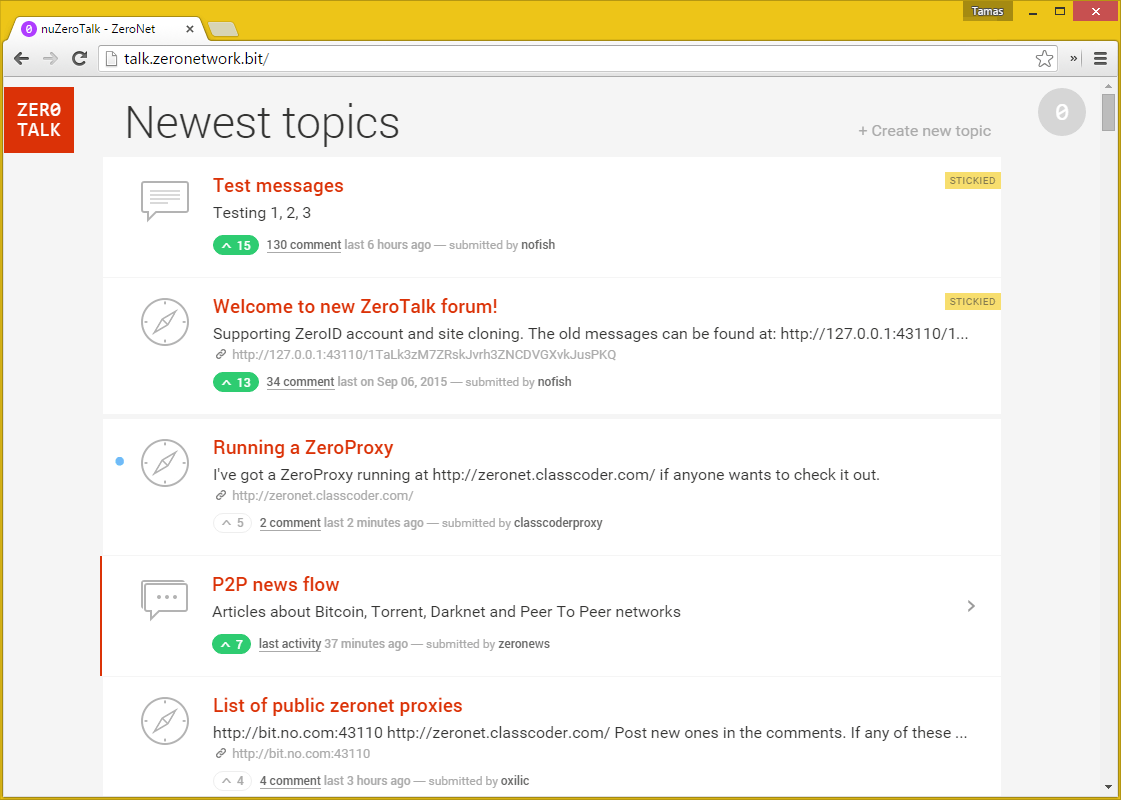

## Screenshots

@@ -59,163 +56,101 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

-#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)

+#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

## How to join

-* Download ZeroBundle package:

- * [Microsoft Windows](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist/ZeroNet-win.zip)

- * [Apple macOS](https://github.com/HelloZeroNet/ZeroNet-mac/archive/dist/ZeroNet-mac.zip)

- * [Linux x86/64-bit](https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux64.tar.gz)

- * [Linux x86/32-bit](https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux32.tar.gz)

-* Unpack anywhere

-* Run `ZeroNet.exe` (win), `ZeroNet(.app)` (osx), `ZeroNet.sh` (linux)

+### Windows

-### Linux terminal on x86-64

+ - Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)

+ - Unpack anywhere

+ - Run `ZeroNet.exe`

+

+### macOS

-* `wget https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux64.tar.gz`

-* `tar xvpfz ZeroBundle-linux64.tar.gz`

-* `cd ZeroBundle`

-* Start with `./ZeroNet.sh`

+ - Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)

+ - Unpack anywhere

+ - Run `ZeroNet.app`

+

+### Linux (x86-64bit)

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`

+ - `unzip ZeroNet-linux.zip`

+ - `cd ZeroNet-linux`

+ - Start with: `./ZeroNet.sh`

+ - Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

+

+ __Tip:__ Start with `./ZeroNet.sh --ui_ip '*' --ui_restrict your.ip.address` to allow remote connections on the web interface.
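After starting `./ZeroNet.sh`, it can help to confirm that the web interface is actually accepting connections before opening the browser. The sketch below only checks that a TCP connection to `127.0.0.1:43110` (the address given in the steps above) succeeds. The function name `ui_is_listening` is made up for this example and is not part of ZeroNet.

```python
import socket


def ui_is_listening(host: str = "127.0.0.1", port: int = 43110,
                    timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out)
        # when nothing accepts the connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `ui_is_listening()` with no arguments checks the default local address. When the `--ui_ip` tip is used, pass the remote host instead.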

+

+ ### Android (arm, arm64, x86)

+ - minimum Android version supported 21 (Android 5.0 Lollipop)

+ - [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+ - APK download: https://github.com/canewsin/zeronet_mobile/releases

-It downloads the latest version of ZeroNet then starts it automatically.

+### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)

+ - minimum Android version supported 16 (JellyBean)

+ - [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

-#### Manual install for Debian Linux

-* `sudo apt-get update`

-* `sudo apt-get install msgpack-python python-gevent`

-* `wget https://github.com/HelloZeroNet/ZeroNet/archive/master.tar.gz`

-* `tar xvpfz master.tar.gz`

-* `cd ZeroNet-master`

-* Start with `python2 zeronet.py`

-* Open http://127.0.0.1:43110/ in your browser

+#### Docker

+There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

-### [Whonix](https://www.whonix.org)

+### Online Proxies

+Proxies are like seed boxes for sites(i.e ZNX runs on a cloud vps), you can try zeronet experience from proxies. Add your proxy below if you have one.

-* [Instructions](https://www.whonix.org/wiki/ZeroNet)

+#### Official ZNX Proxy :

-### [Arch Linux](https://www.archlinux.org)

+https://proxy.zeronet.dev/

-* `git clone https://aur.archlinux.org/zeronet.git`

-* `cd zeronet`

-* `makepkg -srci`

-* `systemctl start zeronet`

-* Open http://127.0.0.1:43110/ in your browser

+https://zeronet.dev/

-See [ArchWiki](https://wiki.archlinux.org)'s [ZeroNet

-article](https://wiki.archlinux.org/index.php/ZeroNet) for further assistance.

+#### From Community

-### [Gentoo Linux](https://www.gentoo.org)

+https://0net-preview.com/

-* [`eselect repository enable raiagent`](https://github.com/leycec/raiagent)

-* `emerge --sync`

-* `echo 'net-vpn/zeronet' >> /etc/portage/package.accept_keywords`

-* *(Optional)* Enable Tor support: `echo 'net-vpn/zeronet tor' >>

- /etc/portage/package.use`

-* `emerge zeronet`

-* `rc-service zeronet start`

-* *(Optional)* Enable zeronet at runlevel "default": `rc-update add zeronet`

-* Open http://127.0.0.1:43110/ in your browser

+https://portal.ngnoid.tv/

-See `/usr/share/doc/zeronet-*/README.gentoo.bz2` for further assistance.

+https://zeronet.ipfsscan.io/

-### [FreeBSD](https://www.freebsd.org/)

-* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser

+### Install from source

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* Access VM with `vagrant ssh`

-* `cd /vagrant`

-* Run `python2 zeronet.py --ui_ip 0.0.0.0`

-* Open http://127.0.0.1:43110/ in your browser

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v
