+- [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+- Download APK: https://github.com/canewsin/zeronet_mobile/releases

-### [Gentoo Linux](https://www.gentoo.org)

+### Android (arm, arm64, x86) Thin client for preview only (1MB)

-* [`layman -a raiagent`](https://github.com/leycec/raiagent)

-* `echo '>=net-vpn/zeronet-0.5.4' >> /etc/portage/package.accept_keywords`

-* *(Optional)* Enable Tor support: `echo 'net-vpn/zeronet tor' >> /etc/portage/package.use`

-* `emerge zeronet`

-* `rc-service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser.

+- Requires at least Android 4.1 Jelly Bean

+- [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

-See `/usr/share/doc/zeronet-*/README.gentoo.bz2` for further help.

+### Install from source

-### [FreeBSD](https://www.freebsd.org/)

-

-* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser.

-

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* Connect to the VM with `vagrant ssh`

-* `cd /vagrant`

-* Run `python2 zeronet.py --ui_ip 0.0.0.0`

-* Open http://127.0.0.1:43110/ in your browser.

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v


+ - APK download: https://github.com/canewsin/zeronet_mobile/releases

-* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser

-

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* Connect to the VM with `vagrant ssh`

-* `cd /vagrant`

-* Run `python2 zeronet.py --ui_ip 0.0.0.0`

-* Open http://127.0.0.1:43110/ in your browser

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v


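## Current limitations

-* ~~No BitTorrent-like file splitting for big file support~~ (big file support added)

-* ~~No more anonymous than BitTorrent~~ (built-in full Tor support added)

-* File transfers are not compressed ~~or encrypted~~ (TLS support added)

+* File transfers are not compressed

* No private sites

-## How can I create a ZeroNet site?

+## How can I create a ZeroNet site?

+ * Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site

+ * You will be **redirected** to a completely new site that only you can modify

+ * You can find and modify your site's content in the **data/[your site address]** directory

+ * After making changes, open your site, drag the "0" button in the top-right corner to the left, then press the **sign** and **publish** buttons at the bottom

-Shut down zeronet if it is running

-Run: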

-```bash

-$ zeronet.py siteCreate

-...

-- Site private key: 23DKQpzxhbVBrAtvLEc2uvk7DZweh4qL3fn3jpM3LgHDczMK2TtYUq

-- Site address: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-- Site created!

-$ zeronet.py

-...

-```

-

-You're done! Now anyone can visit your site at

-`http://localhost:43110/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2`

-

-Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

-

-

-## How can I modify a ZeroNet site?

-

-* Modify the files located in the data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2 directory.

- After you're finished:

-

-```bash

-$ zeronet.py siteSign 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-- Signing site: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2...

-Private key (input hidden):

-```

-

-* Enter the private key you got when you created the site

-

-```bash

-$ zeronet.py sitePublish 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-Site:13DNDk..bhC2 Publishing to 3/10 peers...

-Site:13DNDk..bhC2 Successfuly published to 3 peers

-- Serving files....

-```

-

-* That's it! You've successfully signed and published your changes.

-

+Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help this project

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-### Sponsors

+#### Thank you!

-* Better OSX/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)

-

-#### Thanks!

-

-* More info, help, changelog, and zeronet sites: https://www.reddit.com/r/zeronet/

-* Chat with us at [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* [Here](https://gitter.im/ZeroNet-zh/Lobby) is a Chinese-language chat room on gitter

-* Email: hello@noloop.me

+* More info, help, changelog, and zeronet sites: https://www.reddit.com/r/zeronetx/

+* Chat with us at [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)

+* [Here](https://gitter.im/canewsin/ZeroNet) is a Chinese-language chat room on gitter

+* Email: canews.in@gmail.com

diff --git a/README.md b/README.md

index 708116e3..70b79adc 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,6 @@

-__Warning: Development test version, do not use on live data__

-

-# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)

-

-Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io

+# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

+

+Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/). Unlike Bitcoin, ZeroNet doesn't need a blockchain to run, but it uses the same cryptography as Bitcoin to ensure data integrity and validation.

## Why?

@@ -35,22 +33,22 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* After starting `zeronet.py` you will be able to visit zeronet sites using

`http://127.0.0.1:43110/{zeronet_address}` (eg.

- `http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).

+ `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

* When you visit a new zeronet site, it tries to find peers using the BitTorrent

network so it can download the site files (html, css, js...) from them.

* Each visited site is also served by you.

* Every site contains a `content.json` file which holds the sha512 hashes of all other files

and a signature generated using the site's private key.

* If the site owner (who has the private key for the site address) modifies the

- site, then he/she signs the new `content.json` and publishes it to the peers.

+ site, they sign the new `content.json` and publish it to the peers.

Afterwards, the peers verify the `content.json` integrity (using the

signature), they download the modified files and publish the new content to

other peers.
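
The integrity check described in the list above can be illustrated with a minimal Python sketch. It is not ZeroNet's implementation: the manifest layout (`files` mapping each name to `sha512` and `size`) and the helper names `build_manifest` and `changed_files` are assumptions made for this example, and the signature check on `content.json` itself is left out.

```python
import hashlib


def sha512_hex(data: bytes) -> str:
    """Return the hex SHA-512 digest of data."""
    return hashlib.sha512(data).hexdigest()


def build_manifest(files: dict) -> dict:
    """Map each file name to its hash and size (hypothetical layout)."""
    return {
        "files": {
            name: {"sha512": sha512_hex(body), "size": len(body)}
            for name, body in files.items()
        }
    }


def changed_files(manifest: dict, local_files: dict) -> list:
    """List files that are missing locally or whose bytes do not match the manifest."""
    stale = []
    for name, info in manifest["files"].items():
        body = local_files.get(name)
        if body is None or sha512_hex(body) != info["sha512"]:
            stale.append(name)
    return sorted(stale)


# The owner publishes a manifest; a peer compares its local copy against it.
site = {"index.html": b"<h1>Hello</h1>", "style.css": b"h1 { color: red }"}
manifest = build_manifest(site)
local_copy = {"index.html": b"<h1>Hello</h1>", "style.css": b"h1 { color: blue }"}
print(changed_files(manifest, local_copy))  # ['style.css']
```

A peer would only need to download the files reported as stale, which is why a single signed manifest is enough to validate a whole site update.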

#### [Slideshow about ZeroNet cryptography, site updates, multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

-#### [Frequently asked questions »](https://zeronet.io/docs/faq/)

+#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

-#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)

+#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

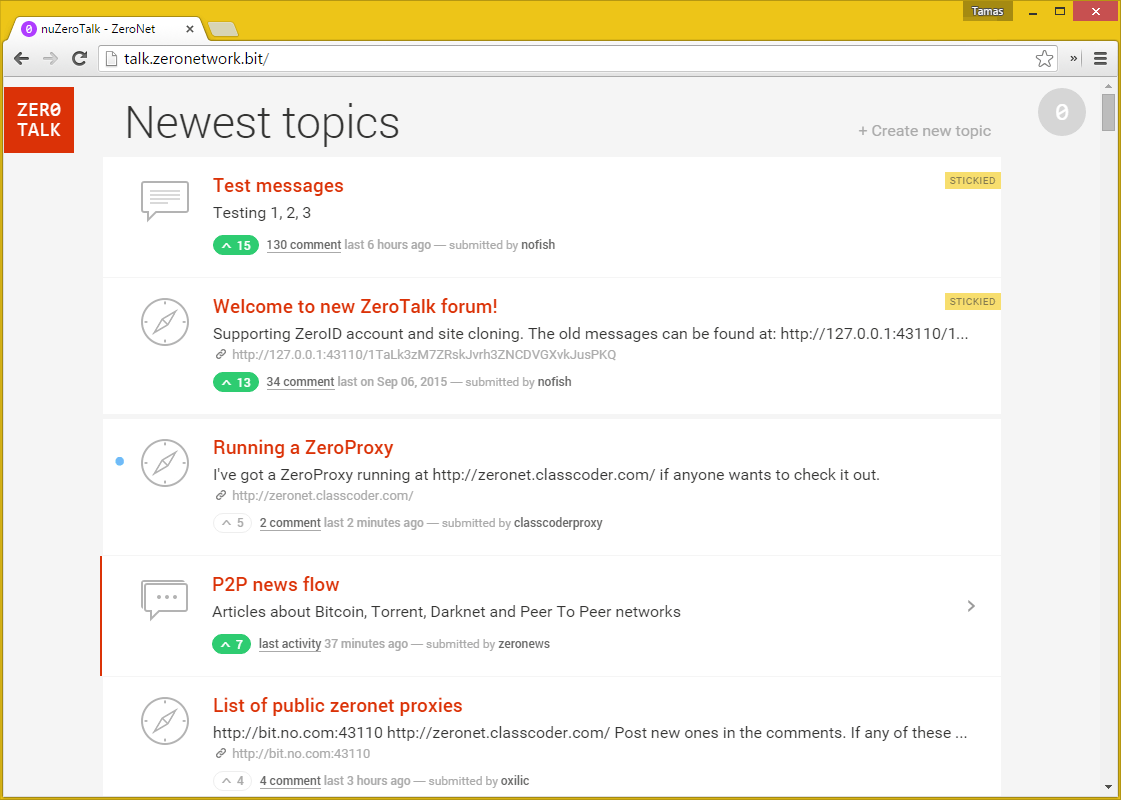

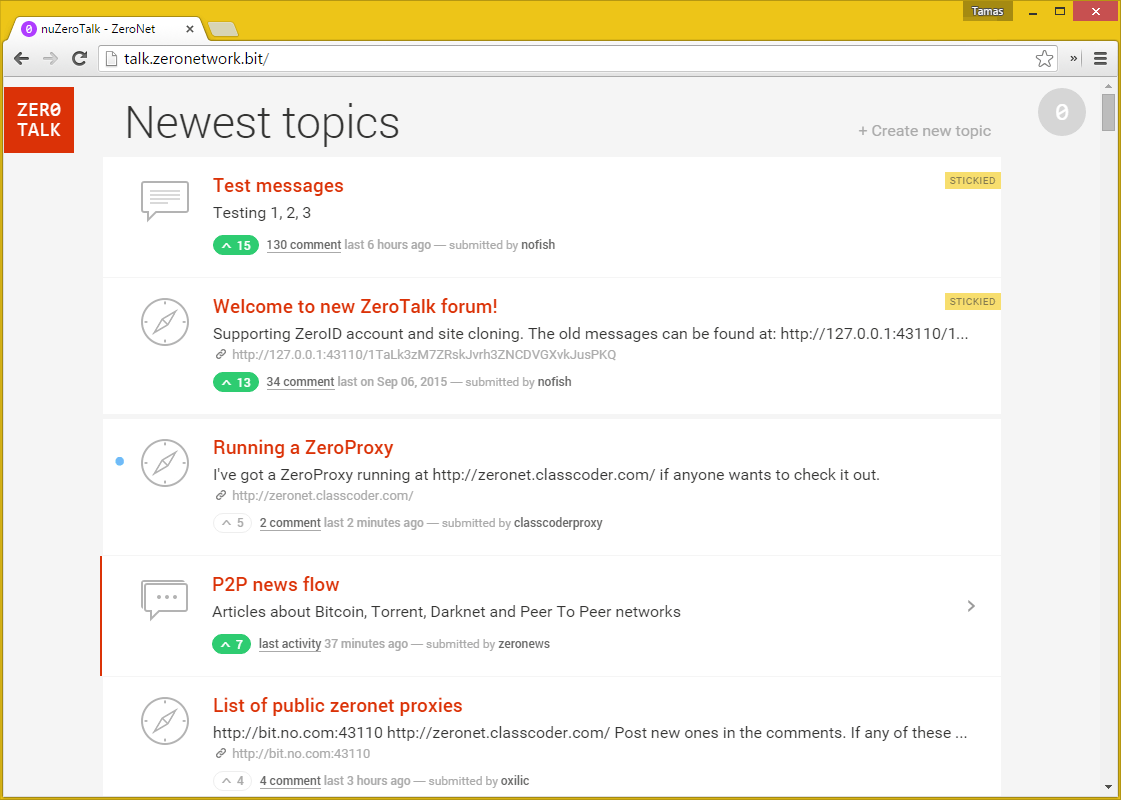

## Screenshots

@@ -58,116 +56,101 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

-#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)

+#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

## How to join

-### Install from package for your distribution

+### Windows

+

+ - Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)

+ - Unpack anywhere

+ - Run `ZeroNet.exe`

+

+### macOS

+

+ - Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)

+ - Unpack anywhere

+ - Run `ZeroNet.app`

+

+### Linux (x86-64bit)

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`

+ - `unzip ZeroNet-linux.zip`

+ - `cd ZeroNet-linux`

+ - Start with: `./ZeroNet.sh`

+ - Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

+

+ __Tip:__ Start with `./ZeroNet.sh --ui_ip '*' --ui_restrict your.ip.address` to allow remote connections on the web interface.

+

+ ### Android (arm, arm64, x86)

+ - Minimum supported Android API level: 21 (Android 5.0 Lollipop)

+ - [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+ - APK download: https://github.com/canewsin/zeronet_mobile/releases

+

+### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)

+ - Minimum supported Android API level: 16 (Android 4.1 Jelly Bean)

+ - [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

+

+

+#### Docker

+There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

+

+### Online Proxies

+Proxies are like seed boxes for sites (i.e. ZNX runs on a cloud VPS). You can try the ZeroNet experience through a proxy. Add your proxy below if you have one.

+

+#### Official ZNX Proxy:

+

+https://proxy.zeronet.dev/

+

+https://zeronet.dev/

+

+#### From Community

+

+https://0net-preview.com/

+

+https://portal.ngnoid.tv/

+

+https://zeronet.ipfsscan.io/

-* Arch Linux: [zeronet](https://aur.archlinux.org/zeronet.git), [zeronet-git](https://aur.archlinux.org/zeronet-git.git)

-* Gentoo: [emerge repository](https://github.com/leycec/raiagent)

-* FreeBSD: zeronet

-* Whonix: [instructions](https://www.whonix.org/wiki/ZeroNet)

### Install from source

-Fetch and extract the source:

-

- wget https://github.com/HelloZeroNet/ZeroNet/archive/py3.tar.gz

- tar xvpfz py3.tar.gz

- cd ZeroNet-py3

-

-Install Python module dependencies either:

-

-* (Option A) into a [virtual env](https://virtualenv.readthedocs.org/en/latest/)

-

- ```

- virtualenv zeronet

- source zeronet/bin/activate

- python -m pip install -r requirements.txt

- ```

-

-* (Option B) into the system (requires root), for example, on Debian/Ubuntu:

-

- ```

- sudo apt-get update

- sudo apt-get install python3-pip

- sudo python3 -m pip install -r requirements.txt

- ```

-

-Start Zeronet:

-

- python3 zeronet.py

-

-Open the ZeroHello landing page in your browser by navigating to:

-

- http://127.0.0.1:43110/

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`

+ - `unzip ZeroNet-src.zip`

+ - `cd ZeroNet`

+ - `sudo apt-get update`

+ - `sudo apt-get install python3-pip`

+ - `sudo python3 -m pip install -r requirements.txt`

+ - Start with: `python3 zeronet.py`

+ - Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

## Current limitations

-* ~~No torrent-like file splitting for big file support~~ (big file support added)

-* ~~No more anonymous than Bittorrent~~ (built-in full Tor support added)

-* File transactions are not compressed ~~or encrypted yet~~ (TLS encryption added)

+* File transactions are not compressed

* No private sites

## How can I create a ZeroNet site?

-Shut down zeronet if you are running it already

-

-```bash

-$ zeronet.py siteCreate

-...

-- Site private key: 23DKQpzxhbVBrAtvLEc2uvk7DZweh4qL3fn3jpM3LgHDczMK2TtYUq

-- Site address: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-- Site created!

-$ zeronet.py

-...

-```

-

-Congratulations, you're finished! Now anyone can access your site using

-`http://localhost:43110/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2`

-

-Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

-

-

-## How can I modify a ZeroNet site?

-

-* Modify files located in data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2 directory.

- After you're finished:

-

-```bash

-$ zeronet.py siteSign 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-- Signing site: 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2...

-Private key (input hidden):

-```

-

-* Enter the private key you got when you created the site, then:

-

-```bash

-$ zeronet.py sitePublish 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

-...

-Site:13DNDk..bhC2 Publishing to 3/10 peers...

-Site:13DNDk..bhC2 Successfuly published to 3 peers

-- Serving files....

-```

-

-* That's it! You've successfully signed and published your modifications.

+ * Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site.

+ * You will be **redirected** to a completely new site that only you can modify.

+ * You can find and modify your site's content in the **data/[yoursiteaddress]** directory.

+ * After making your changes, open your site, drag the top-right "0" button to the left, then press the **sign** and **publish** buttons at the bottom.

+Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help keep this project alive

-

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-

-### Sponsors

-

-* Better macOS/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

#### Thank you!

-* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronet/

-* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* Email: hello@zeronet.io (PGP: CB9613AE)

+* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronetx/

+* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)

+* Email: canews.in@gmail.com

diff --git a/plugins b/plugins

new file mode 160000

index 00000000..689d9309

--- /dev/null

+++ b/plugins

@@ -0,0 +1 @@

+Subproject commit 689d9309f73371f4681191b125ec3f2e14075eeb

diff --git a/plugins/AnnounceLocal/AnnounceLocalPlugin.py b/plugins/AnnounceLocal/AnnounceLocalPlugin.py

deleted file mode 100644

index 0919762a..00000000

--- a/plugins/AnnounceLocal/AnnounceLocalPlugin.py

+++ /dev/null

@@ -1,148 +0,0 @@

-import time
-
-import gevent
-
-from Plugin import PluginManager
-from Config import config
-from . import BroadcastServer
-
-
-@PluginManager.registerTo("SiteAnnouncer")
-class SiteAnnouncerPlugin(object):
-    def announce(self, force=False, *args, **kwargs):
-        local_announcer = self.site.connection_server.local_announcer
-
-        thread = None
-        if local_announcer and (force or time.time() - local_announcer.last_discover > 5 * 60):
-            thread = gevent.spawn(local_announcer.discover, force=force)
-        back = super(SiteAnnouncerPlugin, self).announce(force=force, *args, **kwargs)
-
-        if thread:
-            thread.join()
-
-        return back
-
-
-class LocalAnnouncer(BroadcastServer.BroadcastServer):
-    def __init__(self, server, listen_port):
-        super(LocalAnnouncer, self).__init__("zeronet", listen_port=listen_port)
-        self.server = server
-
-        self.sender_info["peer_id"] = self.server.peer_id
-        self.sender_info["port"] = self.server.port
-        self.sender_info["broadcast_port"] = listen_port
-        self.sender_info["rev"] = config.rev
-
-        self.known_peers = {}
-        self.last_discover = 0
-
-    def discover(self, force=False):
-        self.log.debug("Sending discover request (force: %s)" % force)
-        self.last_discover = time.time()
-        if force:  # Probably new site added, clean cache
-            self.known_peers = {}
-
-        for peer_id, known_peer in list(self.known_peers.items()):
-            if time.time() - known_peer["found"] > 20 * 60:
-                del(self.known_peers[peer_id])
-                self.log.debug("Timeout, removing from known_peers: %s" % peer_id)
-        self.broadcast({"cmd": "discoverRequest", "params": {}}, port=self.listen_port)
-
-    def actionDiscoverRequest(self, sender, params):
-        back = {
-            "cmd": "discoverResponse",
-            "params": {
-                "sites_changed": self.server.site_manager.sites_changed
-            }
-        }
-
-        if sender["peer_id"] not in self.known_peers:
-            self.known_peers[sender["peer_id"]] = {"added": time.time(), "sites_changed": 0, "updated": 0, "found": time.time()}
-            self.log.debug("Got discover request from unknown peer %s (%s), time to refresh known peers" % (sender["ip"], sender["peer_id"]))
-            gevent.spawn_later(1.0, self.discover)  # Let the response arrive first to the requester
-
-        return back
-
-    def actionDiscoverResponse(self, sender, params):
-        if sender["peer_id"] in self.known_peers:
-            self.known_peers[sender["peer_id"]]["found"] = time.time()
-        if params["sites_changed"] != self.known_peers.get(sender["peer_id"], {}).get("sites_changed"):
-            # Peer's site list changed, request the list of new sites
-            return {"cmd": "siteListRequest"}
-        else:
-            # Peer's site list is the same
-            for site in self.server.sites.values():
-                peer = site.peers.get("%s:%s" % (sender["ip"], sender["port"]))
-                if peer:
-                    peer.found("local")
-
-    def actionSiteListRequest(self, sender, params):
-        back = []
-        sites = list(self.server.sites.values())
-
-        # Split adresses to group of 100 to avoid UDP size limit
-        site_groups = [sites[i:i + 100] for i in range(0, len(sites), 100)]
-        for site_group in site_groups:
-            res = {}
-            res["sites_changed"] = self.server.site_manager.sites_changed
-            res["sites"] = [site.address_hash for site in site_group]
-            back.append({"cmd": "siteListResponse", "params": res})
-        return back
-
-    def actionSiteListResponse(self, sender, params):
-        s = time.time()
-        peer_sites = set(params["sites"])
-        num_found = 0
-        added_sites = []
-        for site in self.server.sites.values():
-            if site.address_hash in peer_sites:
-                added = site.addPeer(sender["ip"], sender["port"], source="local")
-                num_found += 1
-                if added:
-                    site.worker_manager.onPeers()
-                    site.updateWebsocket(peers_added=1)
-                    added_sites.append(site)
-
-        # Save sites changed value to avoid unnecessary site list download
-        if sender["peer_id"] not in self.known_peers:
-            self.known_peers[sender["peer_id"]] = {"added": time.time()}
-
-        self.known_peers[sender["peer_id"]]["sites_changed"] = params["sites_changed"]
-        self.known_peers[sender["peer_id"]]["updated"] = time.time()
-        self.known_peers[sender["peer_id"]]["found"] = time.time()
-
-        self.log.debug(
-            "Tracker result: Discover from %s response parsed in %.3fs, found: %s added: %s of %s" %
-            (sender["ip"], time.time() - s, num_found, added_sites, len(peer_sites))
-        )
-
-
-@PluginManager.registerTo("FileServer")
-class FileServerPlugin(object):
-    def __init__(self, *args, **kwargs):
-        res = super(FileServerPlugin, self).__init__(*args, **kwargs)
-        if config.broadcast_port and config.tor != "always" and not config.disable_udp:
-            self.local_announcer = LocalAnnouncer(self, config.broadcast_port)
-        else:
-            self.local_announcer = None
-        return res
-
-    def start(self, *args, **kwargs):
-        if self.local_announcer:
-            gevent.spawn(self.local_announcer.start)
-        return super(FileServerPlugin, self).start(*args, **kwargs)
-
-    def stop(self):
-        if self.local_announcer:
-            self.local_announcer.stop()
-        res = super(FileServerPlugin, self).stop()
-        return res
-
-
-@PluginManager.registerTo("ConfigPlugin")
-class ConfigPlugin(object):
-    def createArguments(self):
-        group = self.parser.add_argument_group("AnnounceLocal plugin")
-        group.add_argument('--broadcast_port', help='UDP broadcasting port for local peer discovery', default=1544, type=int, metavar='port')
-
-        return super(ConfigPlugin, self).createArguments()
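
Two techniques in the removed `LocalAnnouncer` are easy to try in isolation: splitting the site list into groups of 100 so that each response fits in one UDP datagram, and expiring known peers that have not been seen for 20 minutes. The sketch below is a standalone illustration, not part of the plugin; the function names `split_into_groups` and `prune_known_peers` and the explicit `now` parameter are assumptions added for this example.

```python
import time


def split_into_groups(items, group_size=100):
    """Split items into consecutive groups of at most group_size entries."""
    return [items[i:i + group_size] for i in range(0, len(items), group_size)]


def prune_known_peers(known_peers, now=None, timeout=20 * 60):
    """Remove peers whose "found" timestamp is older than timeout seconds."""
    now = time.time() if now is None else now
    # Iterate over a copy so entries can be deleted while looping.
    for peer_id, peer in list(known_peers.items()):
        if now - peer["found"] > timeout:
            del known_peers[peer_id]
    return known_peers


groups = split_into_groups(list(range(250)))
print([len(group) for group in groups])  # [100, 100, 50]

peers = {"fresh": {"found": 1000.0}, "stale": {"found": 0.0}}
print(sorted(prune_known_peers(peers, now=1500.0)))  # ['fresh']
```

Passing `now` explicitly keeps the pruning logic deterministic in tests, whereas the plugin calls `time.time()` directly.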

diff --git a/plugins/AnnounceLocal/BroadcastServer.py b/plugins/AnnounceLocal/BroadcastServer.py

deleted file mode 100644

index 74678896..00000000

--- a/plugins/AnnounceLocal/BroadcastServer.py

+++ /dev/null

@@ -1,139 +0,0 @@

-import socket
-import logging
-import time
-from contextlib import closing
-
-from Debug import Debug
-from util import UpnpPunch
-from util import Msgpack
-
-
-class BroadcastServer(object):
-    def __init__(self, service_name, listen_port=1544, listen_ip=''):
-        self.log = logging.getLogger("BroadcastServer")
-        self.listen_port = listen_port
-        self.listen_ip = listen_ip
-
-        self.running = False
-        self.sock = None
-        self.sender_info = {"service": service_name}
-
-    def createBroadcastSocket(self):
-        sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
-        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
-        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
-        if hasattr(socket, 'SO_REUSEPORT'):
-            try:
-                sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
-            except Exception as err:
-                self.log.warning("Error setting SO_REUSEPORT: %s" % err)
-
-        binded = False
-        for retry in range(3):
-            try:
-                sock.bind((self.listen_ip, self.listen_port))
-                binded = True
-                break
-            except Exception as err:
-                self.log.error(
-                    "Socket bind to %s:%s error: %s, retry #%s" %
-                    (self.listen_ip, self.listen_port, Debug.formatException(err), retry)
-                )
-                time.sleep(retry)
-
-        if binded:
-            return sock
-        else:
-            return False
-
-    def start(self):  # Listens for discover requests
-        self.sock = self.createBroadcastSocket()
-        if not self.sock:
-            self.log.error("Unable to listen on port %s" % self.listen_port)
-            return
-
-        self.log.debug("Started on port %s" % self.listen_port)
-
-        self.running = True
-
-        while self.running:
-            try:
-                data, addr = self.sock.recvfrom(8192)
-            except Exception as err:
-                if self.running:
-                    self.log.error("Listener receive error: %s" % err)
-                continue
-
-            if not self.running:
-                break
-
-            try:
-                message = Msgpack.unpack(data)
-                response_addr, message = self.handleMessage(addr, message)
-                if message:
-                    self.send(response_addr, message)
-            except Exception as err:
-                self.log.error("Handlemessage error: %s" % Debug.formatException(err))
-        self.log.debug("Stopped listening on port %s" % self.listen_port)
-
-    def stop(self):
-        self.log.debug("Stopping, socket: %s" % self.sock)
-        self.running = False
-        if self.sock:
-            self.sock.close()
-
-    def send(self, addr, message):
-        if type(message) is not list:
-            message = [message]
-
-        for message_part in message:
-            message_part["sender"] = self.sender_info
-
-            self.log.debug("Send to %s: %s" % (addr, message_part["cmd"]))
-            with closing(socket.socket(socket.AF_INET, socket.SOCK_DGRAM)) as sock:
-                sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
-                sock.sendto(Msgpack.pack(message_part), addr)
-
-    def getMyIps(self):
-        return UpnpPunch._get_local_ips()
-
-    def broadcast(self, message, port=None):
-        if not port:
-            port = self.listen_port
-
-        my_ips = self.getMyIps()
-        addr = ("255.255.255.255", port)
-
-        message["sender"] = self.sender_info
-        self.log.debug("Broadcast using ips %s on port %s: %s" % (my_ips, port, message["cmd"]))
-
-        for my_ip in my_ips:
-            try:
-                with closing(socket.socket(socket.AF_INET, socket.SOCK_DGRAM)) as sock:
-                    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
-                    sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
-                    sock.bind((my_ip, 0))
-                    sock.sendto(Msgpack.pack(message), addr)
-            except Exception as err:
-                self.log.warning("Error sending broadcast using ip %s: %s" % (my_ip, err))
-
-    def handleMessage(self, addr, message):
-        self.log.debug("Got from %s: %s" % (addr, message["cmd"]))
-        cmd = message["cmd"]
-        params = message.get("params", {})
-        sender = message["sender"]
-        sender["ip"] = addr[0]
-
-        func_name = "action" + cmd[0].upper() + cmd[1:]
-        func = getattr(self, func_name, None)
-
-        if sender["service"] != "zeronet" or sender["peer_id"] == self.sender_info["peer_id"]:
-            # Skip messages not for us or sent by us
-            message = None
-        elif func:
-            message = func(sender, params)
-        else:
-            self.log.debug("Unknown cmd: %s" % cmd)
-            message = None
-
-        return (sender["ip"], sender["broadcast_port"]), message

diff --git a/plugins/AnnounceLocal/Test/TestAnnounce.py b/plugins/AnnounceLocal/Test/TestAnnounce.py

deleted file mode 100644

index 4def02ed..00000000

--- a/plugins/AnnounceLocal/Test/TestAnnounce.py

+++ /dev/null

@@ -1,113 +0,0 @@

-import time

-import copy

-

-import gevent

-import pytest

-import mock

-

-from AnnounceLocal import AnnounceLocalPlugin

-from File import FileServer

-from Test import Spy

-

-@pytest.fixture

-def announcer(file_server, site):

- file_server.sites[site.address] = site

- announcer = AnnounceLocalPlugin.LocalAnnouncer(file_server, listen_port=1100)

- file_server.local_announcer = announcer

- announcer.listen_port = 1100

- announcer.sender_info["broadcast_port"] = 1100

- announcer.getMyIps = mock.MagicMock(return_value=["127.0.0.1"])

- announcer.discover = mock.MagicMock(return_value=False) # Don't send discover requests automatically

- gevent.spawn(announcer.start)

- time.sleep(0.5)

-

- assert file_server.local_announcer.running

- return file_server.local_announcer

-

-@pytest.fixture

-def announcer_remote(request, site_temp):

- file_server_remote = FileServer("127.0.0.1", 1545)

- file_server_remote.sites[site_temp.address] = site_temp

- announcer = AnnounceLocalPlugin.LocalAnnouncer(file_server_remote, listen_port=1101)

- file_server_remote.local_announcer = announcer

- announcer.listen_port = 1101

- announcer.sender_info["broadcast_port"] = 1101

- announcer.getMyIps = mock.MagicMock(return_value=["127.0.0.1"])

- announcer.discover = mock.MagicMock(return_value=False) # Don't send discover requests automatically

- gevent.spawn(announcer.start)

- time.sleep(0.5)

-

- assert file_server_remote.local_announcer.running

-

- def cleanup():

- file_server_remote.stop()

- request.addfinalizer(cleanup)

-

-

- return file_server_remote.local_announcer

-

-@pytest.mark.usefixtures("resetSettings")

-@pytest.mark.usefixtures("resetTempSettings")

-class TestAnnounce:

- def testSenderInfo(self, announcer):

- sender_info = announcer.sender_info

- assert sender_info["port"] > 0

- assert len(sender_info["peer_id"]) == 20

- assert sender_info["rev"] > 0

-

- def testIgnoreSelfMessages(self, announcer):

- # No response to messages that have the same peer_id as the server

- assert not announcer.handleMessage(("0.0.0.0", 123), {"cmd": "discoverRequest", "sender": announcer.sender_info, "params": {}})[1]

-

- # Response to messages with different peer id

- sender_info = copy.copy(announcer.sender_info)

- sender_info["peer_id"] += "-"

- addr, res = announcer.handleMessage(("0.0.0.0", 123), {"cmd": "discoverRequest", "sender": sender_info, "params": {}})

- assert res["params"]["sites_changed"] > 0

-

- def testDiscoverRequest(self, announcer, announcer_remote):

- assert len(announcer_remote.known_peers) == 0

- with Spy.Spy(announcer_remote, "handleMessage") as responses:

- announcer_remote.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer.listen_port)

- time.sleep(0.1)

-

- response_cmds = [response[1]["cmd"] for response in responses]

- assert response_cmds == ["discoverResponse", "siteListResponse"]

- assert len(responses[-1][1]["params"]["sites"]) == 1

-

- # It should only request siteList if sites_changed value is different from last response

- with Spy.Spy(announcer_remote, "handleMessage") as responses:

- announcer_remote.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer.listen_port)

- time.sleep(0.1)

-

- response_cmds = [response[1]["cmd"] for response in responses]

- assert response_cmds == ["discoverResponse"]

-

- def testPeerDiscover(self, announcer, announcer_remote, site):

- assert announcer.server.peer_id != announcer_remote.server.peer_id

- assert len(list(announcer.server.sites.values())[0].peers) == 0

- announcer.broadcast({"cmd": "discoverRequest"}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert len(list(announcer.server.sites.values())[0].peers) == 1

-

- def testRecentPeerList(self, announcer, announcer_remote, site):

- assert len(site.peers_recent) == 0

- assert len(site.peers) == 0

- with Spy.Spy(announcer, "handleMessage") as responses:

- announcer.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert [response[1]["cmd"] for response in responses] == ["discoverResponse", "siteListResponse"]

- assert len(site.peers_recent) == 1

- assert len(site.peers) == 1

-

- # It should update peer without siteListResponse

- last_time_found = list(site.peers.values())[0].time_found

- site.peers_recent.clear()

- with Spy.Spy(announcer, "handleMessage") as responses:

- announcer.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert [response[1]["cmd"] for response in responses] == ["discoverResponse"]

- assert len(site.peers_recent) == 1

- assert list(site.peers.values())[0].time_found > last_time_found

-

-

diff --git a/plugins/AnnounceLocal/Test/conftest.py b/plugins/AnnounceLocal/Test/conftest.py

deleted file mode 100644

index a88c642c..00000000

--- a/plugins/AnnounceLocal/Test/conftest.py

+++ /dev/null

@@ -1,4 +0,0 @@

-from src.Test.conftest import *

-

-from Config import config

-config.broadcast_port = 0

diff --git a/plugins/AnnounceLocal/Test/pytest.ini b/plugins/AnnounceLocal/Test/pytest.ini

deleted file mode 100644

index d09210d1..00000000

--- a/plugins/AnnounceLocal/Test/pytest.ini

+++ /dev/null

@@ -1,5 +0,0 @@

-[pytest]

-python_files = Test*.py

-addopts = -rsxX -v --durations=6

-markers =

- webtest: mark a test as a webtest.

\ No newline at end of file

diff --git a/plugins/AnnounceLocal/__init__.py b/plugins/AnnounceLocal/__init__.py

deleted file mode 100644

index 5b80abd2..00000000

--- a/plugins/AnnounceLocal/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceLocalPlugin

\ No newline at end of file

diff --git a/plugins/AnnounceShare/AnnounceSharePlugin.py b/plugins/AnnounceShare/AnnounceSharePlugin.py

deleted file mode 100644

index 057ce55a..00000000

--- a/plugins/AnnounceShare/AnnounceSharePlugin.py

+++ /dev/null

@@ -1,190 +0,0 @@

-import time

-import os

-import logging

-import json

-import atexit

-

-import gevent

-

-from Config import config

-from Plugin import PluginManager

-from util import helper

-

-

-class TrackerStorage(object):

- def __init__(self):

- self.log = logging.getLogger("TrackerStorage")

- self.file_path = "%s/trackers.json" % config.data_dir

- self.load()

- self.time_discover = 0.0

- atexit.register(self.save)

-

- def getDefaultFile(self):

- return {"shared": {}}

-

- def onTrackerFound(self, tracker_address, type="shared", my=False):

- if not tracker_address.startswith("zero://"):

- return False

-

- trackers = self.getTrackers()

- added = False

- if tracker_address not in trackers:

- trackers[tracker_address] = {

- "time_added": time.time(),

- "time_success": 0,

- "latency": 99.0,

- "num_error": 0,

- "my": False

- }

- self.log.debug("New tracker found: %s" % tracker_address)

- added = True

-

- trackers[tracker_address]["time_found"] = time.time()

- trackers[tracker_address]["my"] = my

- return added

-

- def onTrackerSuccess(self, tracker_address, latency):

- trackers = self.getTrackers()

- if tracker_address not in trackers:

- return False

-

- trackers[tracker_address]["latency"] = latency

- trackers[tracker_address]["time_success"] = time.time()

- trackers[tracker_address]["num_error"] = 0

-

- def onTrackerError(self, tracker_address):

- trackers = self.getTrackers()

- if tracker_address not in trackers:

- return False

-

- trackers[tracker_address]["time_error"] = time.time()

- trackers[tracker_address]["num_error"] += 1

-

- if len(self.getWorkingTrackers()) >= config.working_shared_trackers_limit:

- error_limit = 5

- else:

- error_limit = 30

- error_limit

-

- if trackers[tracker_address]["num_error"] > error_limit and trackers[tracker_address]["time_success"] < time.time() - 60 * 60:

- self.log.debug("Tracker %s looks down, removing." % tracker_address)

- del trackers[tracker_address]

-

- def getTrackers(self, type="shared"):

- return self.file_content.setdefault(type, {})

-

- def getWorkingTrackers(self, type="shared"):

- trackers = {

- key: tracker for key, tracker in self.getTrackers(type).items()

- if tracker["time_success"] > time.time() - 60 * 60

- }

- return trackers

-

- def getFileContent(self):

- if not os.path.isfile(self.file_path):

- open(self.file_path, "w").write("{}")

- return self.getDefaultFile()

- try:

- return json.load(open(self.file_path))

- except Exception as err:

- self.log.error("Error loading trackers list: %s" % err)

- return self.getDefaultFile()

-

- def load(self):

- self.file_content = self.getFileContent()

-

- trackers = self.getTrackers()

- self.log.debug("Loaded %s shared trackers" % len(trackers))

- for address, tracker in list(trackers.items()):

- tracker["num_error"] = 0

- if not address.startswith("zero://"):

- del trackers[address]

-

- def save(self):

- s = time.time()

- helper.atomicWrite(self.file_path, json.dumps(self.file_content, indent=2, sort_keys=True).encode("utf8"))

- self.log.debug("Saved in %.3fs" % (time.time() - s))

-

- def discoverTrackers(self, peers):

- if len(self.getWorkingTrackers()) > config.working_shared_trackers_limit:

- return False

- s = time.time()

- num_success = 0

- for peer in peers:

- if peer.connection and peer.connection.handshake.get("rev", 0) < 3560:

- continue # Not supported

-

- res = peer.request("getTrackers")

- if not res or "error" in res:

- continue

-

- num_success += 1

- for tracker_address in res["trackers"]:

- if type(tracker_address) is bytes: # Backward compatibility

- tracker_address = tracker_address.decode("utf8")

- added = self.onTrackerFound(tracker_address)

- if added: # Only add one tracker from one source

- break

-

- if not num_success and len(peers) < 20:

- self.time_discover = 0.0

-

- if num_success:

- self.save()

-

- self.log.debug("Trackers discovered from %s/%s peers in %.3fs" % (num_success, len(peers), time.time() - s))

-

-

-if "tracker_storage" not in locals():

- tracker_storage = TrackerStorage()

-

-

-@PluginManager.registerTo("SiteAnnouncer")

-class SiteAnnouncerPlugin(object):

- def getTrackers(self):

- if tracker_storage.time_discover < time.time() - 5 * 60:

- tracker_storage.time_discover = time.time()

- gevent.spawn(tracker_storage.discoverTrackers, self.site.getConnectedPeers())

- trackers = super(SiteAnnouncerPlugin, self).getTrackers()

- shared_trackers = list(tracker_storage.getTrackers("shared").keys())

- if shared_trackers:

- return trackers + shared_trackers

- else:

- return trackers

-

- def announceTracker(self, tracker, *args, **kwargs):

- res = super(SiteAnnouncerPlugin, self).announceTracker(tracker, *args, **kwargs)

- if res:

- latency = res

- tracker_storage.onTrackerSuccess(tracker, latency)

- elif res is False:

- tracker_storage.onTrackerError(tracker)

-

- return res

-

-

-@PluginManager.registerTo("FileRequest")

-class FileRequestPlugin(object):

- def actionGetTrackers(self, params):

- shared_trackers = list(tracker_storage.getWorkingTrackers("shared").keys())

- self.response({"trackers": shared_trackers})

-

-

-@PluginManager.registerTo("FileServer")

-class FileServerPlugin(object):

- def portCheck(self, *args, **kwargs):

- res = super(FileServerPlugin, self).portCheck(*args, **kwargs)

- if res and not config.tor == "always" and "Bootstrapper" in PluginManager.plugin_manager.plugin_names:

- for ip in self.ip_external_list:

- my_tracker_address = "zero://%s:%s" % (ip, config.fileserver_port)

- tracker_storage.onTrackerFound(my_tracker_address, my=True)

- return res

-

-

-@PluginManager.registerTo("ConfigPlugin")

-class ConfigPlugin(object):

- def createArguments(self):

- group = self.parser.add_argument_group("AnnounceShare plugin")

- group.add_argument('--working_shared_trackers_limit', help='Stop discovering new shared trackers after this number of shared trackers reached', default=5, type=int, metavar='limit')

-

- return super(ConfigPlugin, self).createArguments()

diff --git a/plugins/AnnounceShare/Test/TestAnnounceShare.py b/plugins/AnnounceShare/Test/TestAnnounceShare.py

deleted file mode 100644

index 7178eac8..00000000

--- a/plugins/AnnounceShare/Test/TestAnnounceShare.py

+++ /dev/null

@@ -1,24 +0,0 @@

-import pytest

-

-from AnnounceShare import AnnounceSharePlugin

-from Peer import Peer

-from Config import config

-

-

-@pytest.mark.usefixtures("resetSettings")

-@pytest.mark.usefixtures("resetTempSettings")

-class TestAnnounceShare:

- def testAnnounceList(self, file_server):

- open("%s/trackers.json" % config.data_dir, "w").write("{}")

- tracker_storage = AnnounceSharePlugin.tracker_storage

- tracker_storage.load()

- peer = Peer(file_server.ip, 1544, connection_server=file_server)

- assert peer.request("getTrackers")["trackers"] == []

-

- tracker_storage.onTrackerFound("zero://%s:15441" % file_server.ip)

- assert peer.request("getTrackers")["trackers"] == []

-

- # It needs to have at least one successful announce to be shared with other peers

- tracker_storage.onTrackerSuccess("zero://%s:15441" % file_server.ip, 1.0)

- assert peer.request("getTrackers")["trackers"] == ["zero://%s:15441" % file_server.ip]

-

diff --git a/plugins/AnnounceShare/Test/conftest.py b/plugins/AnnounceShare/Test/conftest.py

deleted file mode 100644

index 5abd4dd6..00000000

--- a/plugins/AnnounceShare/Test/conftest.py

+++ /dev/null

@@ -1,3 +0,0 @@

-from src.Test.conftest import *

-

-from Config import config

diff --git a/plugins/AnnounceShare/Test/pytest.ini b/plugins/AnnounceShare/Test/pytest.ini

deleted file mode 100644

index d09210d1..00000000

--- a/plugins/AnnounceShare/Test/pytest.ini

+++ /dev/null

@@ -1,5 +0,0 @@

-[pytest]

-python_files = Test*.py

-addopts = -rsxX -v --durations=6

-markers =

- webtest: mark a test as a webtest.

\ No newline at end of file

diff --git a/plugins/AnnounceShare/__init__.py b/plugins/AnnounceShare/__init__.py

deleted file mode 100644

index dc1e40bd..00000000

--- a/plugins/AnnounceShare/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceSharePlugin

diff --git a/plugins/AnnounceZero/AnnounceZeroPlugin.py b/plugins/AnnounceZero/AnnounceZeroPlugin.py

deleted file mode 100644

index dcaa04f0..00000000

--- a/plugins/AnnounceZero/AnnounceZeroPlugin.py

+++ /dev/null

@@ -1,138 +0,0 @@

-import time

-import itertools

-

-from Plugin import PluginManager

-from util import helper

-from Crypt import CryptRsa

-

-allow_reload = False # No source reload supported in this plugin

- time_full_announced = {} # Tracker address: Time of the last full announce of all sites to the tracker

-connection_pool = {} # Tracker address: Peer object

-

-

- # We can only import plugin host classes after the plugins are loaded

-@PluginManager.afterLoad

-def importHostClasses():

- global Peer, AnnounceError

- from Peer import Peer

- from Site.SiteAnnouncer import AnnounceError

-

-

-# Process result got back from tracker

-def processPeerRes(tracker_address, site, peers):

- added = 0

- # Ip4

- found_ipv4 = 0

- peers_normal = itertools.chain(peers.get("ip4", []), peers.get("ipv4", []), peers.get("ipv6", []))

- for packed_address in peers_normal:

- found_ipv4 += 1

- peer_ip, peer_port = helper.unpackAddress(packed_address)

- if site.addPeer(peer_ip, peer_port, source="tracker"):

- added += 1

- # Onion

- found_onion = 0

- for packed_address in peers["onion"]:

- found_onion += 1

- peer_onion, peer_port = helper.unpackOnionAddress(packed_address)

- if site.addPeer(peer_onion, peer_port, source="tracker"):

- added += 1

-

- if added:

- site.worker_manager.onPeers()

- site.updateWebsocket(peers_added=added)

- return added

-

-

-@PluginManager.registerTo("SiteAnnouncer")

-class SiteAnnouncerPlugin(object):

- def getTrackerHandler(self, protocol):

- if protocol == "zero":

- return self.announceTrackerZero

- else:

- return super(SiteAnnouncerPlugin, self).getTrackerHandler(protocol)

-

- def announceTrackerZero(self, tracker_address, mode="start", num_want=10):

- global time_full_announced

- s = time.time()

-

- need_types = ["ip4"] # ip4 for backward compatibility reasons

- need_types += self.site.connection_server.supported_ip_types

- if self.site.connection_server.tor_manager.enabled:

- need_types.append("onion")

-

- if mode == "start" or mode == "more": # Single: Announce only this site

- sites = [self.site]

- full_announce = False

- else: # Multi: Announce all currently serving sites

- full_announce = True

- if time.time() - time_full_announced.get(tracker_address, 0) < 60 * 15: # Don't re-announce all sites within a short time

- return None

- time_full_announced[tracker_address] = time.time()

- from Site import SiteManager

- sites = [site for site in SiteManager.site_manager.sites.values() if site.isServing()]

-

- # Create request

- add_types = self.getOpenedServiceTypes()

- request = {

- "hashes": [], "onions": [], "port": self.fileserver_port, "need_types": need_types, "need_num": 20, "add": add_types

- }

- for site in sites:

- if "onion" in add_types:

- onion = self.site.connection_server.tor_manager.getOnion(site.address)

- request["onions"].append(onion)

- request["hashes"].append(site.address_hash)

-

- # Tracker can remove sites that we don't announce

- if full_announce:

- request["delete"] = True

-

- # Send request to tracker

- tracker_peer = connection_pool.get(tracker_address) # Re-use tracker connection if possible

- if not tracker_peer:

- tracker_ip, tracker_port = tracker_address.rsplit(":", 1)

- tracker_peer = Peer(str(tracker_ip), int(tracker_port), connection_server=self.site.connection_server)

- tracker_peer.is_tracker_connection = True

- connection_pool[tracker_address] = tracker_peer

-

- res = tracker_peer.request("announce", request)

-

- if not res or "peers" not in res:

- if full_announce:

- time_full_announced[tracker_address] = 0

- raise AnnounceError("Invalid response: %s" % res)

-

- # Add peers from response to site

- site_index = 0

- peers_added = 0

- for site_res in res["peers"]:

- site = sites[site_index]

- peers_added += processPeerRes(tracker_address, site, site_res)

- site_index += 1

-

- # Check if we need to prove ownership of the onion addresses by signing

- if "onion_sign_this" in res:

- self.site.log.debug("Signing %s for %s to add %s onions" % (res["onion_sign_this"], tracker_address, len(sites)))

- request["onion_signs"] = {}

- request["onion_sign_this"] = res["onion_sign_this"]

- request["need_num"] = 0

- for site in sites:

- onion = self.site.connection_server.tor_manager.getOnion(site.address)

- publickey = self.site.connection_server.tor_manager.getPublickey(onion)

- if publickey not in request["onion_signs"]:

- sign = CryptRsa.sign(res["onion_sign_this"].encode("utf8"), self.site.connection_server.tor_manager.getPrivatekey(onion))

- request["onion_signs"][publickey] = sign

- res = tracker_peer.request("announce", request)

- if not res or "onion_sign_this" in res:

- if full_announce:

- time_full_announced[tracker_address] = 0

- raise AnnounceError("Announce onion address to failed: %s" % res)

-

- if full_announce:

- tracker_peer.remove() # Close connection, we don't need it in the next 5 minutes

-

- self.site.log.debug(

- "Tracker announce result: zero://%s (sites: %s, new peers: %s, add: %s) in %.3fs" %

- (tracker_address, site_index, peers_added, add_types, time.time() - s)

- )

-

- return True

diff --git a/plugins/AnnounceZero/__init__.py b/plugins/AnnounceZero/__init__.py

deleted file mode 100644

index 8aec5ddb..00000000

--- a/plugins/AnnounceZero/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceZeroPlugin

\ No newline at end of file

diff --git a/plugins/Bigfile/BigfilePiecefield.py b/plugins/Bigfile/BigfilePiecefield.py

deleted file mode 100644

index ee770573..00000000

--- a/plugins/Bigfile/BigfilePiecefield.py

+++ /dev/null

@@ -1,164 +0,0 @@

-import array

-

-

-def packPiecefield(data):

- assert isinstance(data, bytes) or isinstance(data, bytearray)

- res = []

- if not data:

- return array.array("H", b"")

-

- if data[0] == b"\x00":

- res.append(0)

- find = b"\x01"

- else:

- find = b"\x00"

- last_pos = 0

- pos = 0

- while 1:

- pos = data.find(find, pos)

- if find == b"\x00":

- find = b"\x01"

- else:

- find = b"\x00"

- if pos == -1:

- res.append(len(data) - last_pos)

- break

- res.append(pos - last_pos)

- last_pos = pos

- return array.array("H", res)

-

-

-def unpackPiecefield(data):

- if not data:

- return b""

-

- res = []

- char = b"\x01"

- for times in data:

- if times > 10000:

- return b""

- res.append(char * times)

- if char == b"\x01":

- char = b"\x00"

- else:

- char = b"\x01"

- return b"".join(res)

-

-

-def spliceBit(data, idx, bit):

- assert bit == b"\x00" or bit == b"\x01"

- if len(data) < idx:

- data = data.ljust(idx + 1, b"\x00")

- return data[:idx] + bit + data[idx+ 1:]

-

-class Piecefield(object):

- def tostring(self):

- return "".join(["1" if b else "0" for b in self.tobytes()])

-

-

-class BigfilePiecefield(Piecefield):

- __slots__ = ["data"]

-

- def __init__(self):

- self.data = b""

-

- def frombytes(self, s):

- assert isinstance(s, bytes) or isinstance(s, bytearray)

- self.data = s

-

- def tobytes(self):

- return self.data

-

- def pack(self):

- return packPiecefield(self.data).tobytes()

-

- def unpack(self, s):

- self.data = unpackPiecefield(array.array("H", s))

-

- def __getitem__(self, key):

- try:

- return self.data[key]

- except IndexError:

- return False

-

- def __setitem__(self, key, value):

- self.data = spliceBit(self.data, key, value)

-

-class BigfilePiecefieldPacked(Piecefield):

- __slots__ = ["data"]

-

- def __init__(self):

- self.data = b""

-

- def frombytes(self, data):

- assert isinstance(data, bytes) or isinstance(data, bytearray)

- self.data = packPiecefield(data).tobytes()

-

- def tobytes(self):

- return unpackPiecefield(array.array("H", self.data))

-

- def pack(self):

- return array.array("H", self.data).tobytes()

-

- def unpack(self, data):

- self.data = data

-

- def __getitem__(self, key):

- try:

- return self.tobytes()[key]

- except IndexError:

- return False

-

- def __setitem__(self, key, value):

- data = spliceBit(self.tobytes(), key, value)

- self.frombytes(data)

-

-

-if __name__ == "__main__":

- import os

- import psutil

- import time

- testdata = b"\x01" * 100 + b"\x00" * 900 + b"\x01" * 4000 + b"\x00" * 4999 + b"\x01"

- meminfo = psutil.Process(os.getpid()).memory_info

-

- for storage in [BigfilePiecefieldPacked, BigfilePiecefield]:

- print("-- Testing storage: %s --" % storage)

- m = meminfo()[0]

- s = time.time()

- piecefields = {}

- for i in range(10000):

- piecefield = storage()

- piecefield.frombytes(testdata[:i] + b"\x00" + testdata[i + 1:])

- piecefields[i] = piecefield

-

- print("Create x10000: +%sKB in %.3fs (len: %s)" % ((meminfo()[0] - m) / 1024, time.time() - s, len(piecefields[0].data)))

-

- m = meminfo()[0]

- s = time.time()

- for piecefield in list(piecefields.values()):

- val = piecefield[1000]

-

- print("Query one x10000: +%sKB in %.3fs" % ((meminfo()[0] - m) / 1024, time.time() - s))

-

- m = meminfo()[0]

- s = time.time()

- for piecefield in list(piecefields.values()):

- piecefield[1000] = b"\x01"

-

- print("Change one x10000: +%sKB in %.3fs" % ((meminfo()[0] - m) / 1024, time.time() - s))

-

- m = meminfo()[0]

- s = time.time()

- for piecefield in list(piecefields.values()):

- packed = piecefield.pack()

-

- print("Pack x10000: +%sKB in %.3fs (len: %s)" % ((meminfo()[0] - m) / 1024, time.time() - s, len(packed)))

-

- m = meminfo()[0]

- s = time.time()

- for piecefield in list(piecefields.values()):

- piecefield.unpack(packed)

-

- print("Unpack x10000: +%sKB in %.3fs (len: %s)" % ((meminfo()[0] - m) / 1024, time.time() - s, len(piecefields[0].data)))

-

- piecefields = {}

diff --git a/plugins/Bigfile/BigfilePlugin.py b/plugins/Bigfile/BigfilePlugin.py

deleted file mode 100644

index 03a0f44f..00000000

--- a/plugins/Bigfile/BigfilePlugin.py

+++ /dev/null

@@ -1,784 +0,0 @@

-import time

-import os

-import subprocess

-import shutil

-import collections

-import math

-import warnings

-import base64

-import binascii

-import json

-

-import gevent

-import gevent.lock

-

-from Plugin import PluginManager

-from Debug import Debug

-from Crypt import CryptHash

-with warnings.catch_warnings():

- warnings.filterwarnings("ignore") # Ignore missing sha3 warning

- import merkletools

-

-from util import helper

-from util import Msgpack

-import util

-from .BigfilePiecefield import BigfilePiecefield, BigfilePiecefieldPacked

-

-

- # We can only import plugin host classes after the plugins are loaded

-@PluginManager.afterLoad

-def importPluginnedClasses():

- global VerifyError, config

- from Content.ContentManager import VerifyError

- from Config import config

-

-if "upload_nonces" not in locals():

- upload_nonces = {}

-

-

-@PluginManager.registerTo("UiRequest")

-class UiRequestPlugin(object):

- def isCorsAllowed(self, path):

- if path == "/ZeroNet-Internal/BigfileUpload":

- return True

- else:

- return super(UiRequestPlugin, self).isCorsAllowed(path)

-

- @helper.encodeResponse

- def actionBigfileUpload(self):

- nonce = self.get.get("upload_nonce")

- if nonce not in upload_nonces:

- return self.error403("Upload nonce error.")

-

- upload_info = upload_nonces[nonce]

- del upload_nonces[nonce]

-

- self.sendHeader(200, "text/html", noscript=True, extra_headers={

- "Access-Control-Allow-Origin": "null",

- "Access-Control-Allow-Credentials": "true"

- })

-

- self.readMultipartHeaders(self.env['wsgi.input']) # Skip http headers

-

- site = upload_info["site"]

- inner_path = upload_info["inner_path"]

-

- with site.storage.open(inner_path, "wb", create_dirs=True) as out_file:

- merkle_root, piece_size, piecemap_info = site.content_manager.hashBigfile(

- self.env['wsgi.input'], upload_info["size"], upload_info["piece_size"], out_file

- )

-

- if len(piecemap_info["sha512_pieces"]) == 1: # Small file, don't split

- hash = binascii.hexlify(piecemap_info["sha512_pieces"][0])

- hash_id = site.content_manager.hashfield.getHashId(hash)

- site.content_manager.optionalDownloaded(inner_path, hash_id, upload_info["size"], own=True)

-

- else: # Big file

- file_name = helper.getFilename(inner_path)

- site.storage.open(upload_info["piecemap"], "wb").write(Msgpack.pack({file_name: piecemap_info}))

-

- # Find piecemap and file relative path to content.json

- file_info = site.content_manager.getFileInfo(inner_path, new_file=True)

- content_inner_path_dir = helper.getDirname(file_info["content_inner_path"])

- piecemap_relative_path = upload_info["piecemap"][len(content_inner_path_dir):]

- file_relative_path = inner_path[len(content_inner_path_dir):]

-

- # Add file to content.json

- if site.storage.isFile(file_info["content_inner_path"]):

- content = site.storage.loadJson(file_info["content_inner_path"])

- else:

- content = {}

- if "files_optional" not in content:

- content["files_optional"] = {}

-

- content["files_optional"][file_relative_path] = {

- "sha512": merkle_root,

- "size": upload_info["size"],

- "piecemap": piecemap_relative_path,

- "piece_size": piece_size

- }

-

- merkle_root_hash_id = site.content_manager.hashfield.getHashId(merkle_root)

- site.content_manager.optionalDownloaded(inner_path, merkle_root_hash_id, upload_info["size"], own=True)

- site.storage.writeJson(file_info["content_inner_path"], content)

-

- site.content_manager.contents.loadItem(file_info["content_inner_path"]) # reload cache

-

- return json.dumps({

- "merkle_root": merkle_root,

- "piece_num": len(piecemap_info["sha512_pieces"]),

- "piece_size": piece_size,

- "inner_path": inner_path

- })

-

- def readMultipartHeaders(self, wsgi_input):

- found = False

- for i in range(100):

- line = wsgi_input.readline()

- if line == b"\r\n":

- found = True

- break

- if not found:

- raise Exception("No multipart header found")

- return i

-

- def actionFile(self, file_path, *args, **kwargs):

- if kwargs.get("file_size", 0) > 1024 * 1024 and kwargs.get("path_parts"): # Only check files larger than 1MB

- path_parts = kwargs["path_parts"]

- site = self.server.site_manager.get(path_parts["address"])

- big_file = site.storage.openBigfile(path_parts["inner_path"], prebuffer=2 * 1024 * 1024)

- if big_file:

- kwargs["file_obj"] = big_file

- kwargs["file_size"] = big_file.size

-

- return super(UiRequestPlugin, self).actionFile(file_path, *args, **kwargs)

-

-

-@PluginManager.registerTo("UiWebsocket")

-class UiWebsocketPlugin(object):

- def actionBigfileUploadInit(self, to, inner_path, size):

- valid_signers = self.site.content_manager.getValidSigners(inner_path)

- auth_address = self.user.getAuthAddress(self.site.address)

- if not self.site.settings["own"] and auth_address not in valid_signers:

- self.log.error("FileWrite forbidden %s not in valid_signers %s" % (auth_address, valid_signers))

- return self.response(to, {"error": "Forbidden, you can only modify your own files"})

-

- nonce = CryptHash.random()

- piece_size = 1024 * 1024

- inner_path = self.site.content_manager.sanitizePath(inner_path)

- file_info = self.site.content_manager.getFileInfo(inner_path, new_file=True)

-

- content_inner_path_dir = helper.getDirname(file_info["content_inner_path"])

- file_relative_path = inner_path[len(content_inner_path_dir):]

-

- upload_nonces[nonce] = {

- "added": time.time(),

- "site": self.site,

- "inner_path": inner_path,

- "websocket_client": self,

- "size": size,

- "piece_size": piece_size,

- "piecemap": inner_path + ".piecemap.msgpack"

- }

- return {

- "url": "/ZeroNet-Internal/BigfileUpload?upload_nonce=" + nonce,

- "piece_size": piece_size,

- "inner_path": inner_path,

- "file_relative_path": file_relative_path

- }

-

-    def actionSiteSetAutodownloadBigfileLimit(self, to, limit):
-        permissions = self.getPermissions(to)
-        if "ADMIN" not in permissions:
-            return self.response(to, "You don't have permission to run this command")
-
-        self.site.settings["autodownload_bigfile_size_limit"] = int(limit)
-        self.response(to, "ok")

-

-    def actionFileDelete(self, to, inner_path):
-        piecemap_inner_path = inner_path + ".piecemap.msgpack"
-        if self.hasFilePermission(inner_path) and self.site.storage.isFile(piecemap_inner_path):
-            # Also delete .piecemap.msgpack file if exists
-            self.log.debug("Deleting piecemap: %s" % piecemap_inner_path)
-            file_info = self.site.content_manager.getFileInfo(piecemap_inner_path)
-            if file_info:
-                content_json = self.site.storage.loadJson(file_info["content_inner_path"])
-                relative_path = file_info["relative_path"]
-                if relative_path in content_json.get("files_optional", {}):
-                    del content_json["files_optional"][relative_path]
-                    self.site.storage.writeJson(file_info["content_inner_path"], content_json)
-                    self.site.content_manager.loadContent(file_info["content_inner_path"], add_bad_files=False, force=True)
-                    try:
-                        self.site.storage.delete(piecemap_inner_path)
-                    except Exception as err:
-                        self.log.error("File %s delete error: %s" % (piecemap_inner_path, err))
-
-        return super(UiWebsocketPlugin, self).actionFileDelete(to, inner_path)

-

-

-@PluginManager.registerTo("ContentManager")
-class ContentManagerPlugin(object):
-    def getFileInfo(self, inner_path, *args, **kwargs):
-        if "|" not in inner_path:
-            return super(ContentManagerPlugin, self).getFileInfo(inner_path, *args, **kwargs)
-
-        inner_path, file_range = inner_path.split("|")
-        pos_from, pos_to = map(int, file_range.split("-"))
-        file_info = super(ContentManagerPlugin, self).getFileInfo(inner_path, *args, **kwargs)
-        return file_info

-

-    def readFile(self, file_in, size, buff_size=1024 * 64):
-        part_num = 0
-        recv_left = size
-
-        while 1:
-            part_num += 1
-            read_size = min(buff_size, recv_left)
-            part = file_in.read(read_size)
-
-            if not part:
-                break
-            yield part
-
-            if part_num % 100 == 0:  # Avoid blocking ZeroNet execution during upload
-                time.sleep(0.001)
-
-            recv_left -= read_size
-            if recv_left <= 0:
-                break

-
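The removed `readFile` generator streams an upload in bounded chunks rather than loading it into memory at once. A minimal standalone sketch of that pattern follows. The name `read_in_chunks` is hypothetical and not part of the plugin. Unlike the removed code, the sketch decrements the remaining count by the bytes actually read, and it omits the periodic `time.sleep` yield.

```python
import io


def read_in_chunks(file_in, size, buff_size=64 * 1024):
    """Yield at most `size` bytes from file_in in chunks of up to buff_size bytes."""
    recv_left = size
    while recv_left > 0:
        part = file_in.read(min(buff_size, recv_left))
        if not part:  # The stream ended before `size` bytes arrived
            break
        recv_left -= len(part)  # Count what was actually read, not what was requested
        yield part


# Read exactly 150,000 bytes from a stream that holds more than that
data = b"x" * 150_000
chunks = list(read_in_chunks(io.BytesIO(data + b"trailing"), len(data)))
chunk_sizes = [len(chunk) for chunk in chunks]
```

Bytes beyond `size` stay unread in the stream, which is how the caller can stop at the declared upload length.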

-    def hashBigfile(self, file_in, size, piece_size=1024 * 1024, file_out=None):
-        self.site.settings["has_bigfile"] = True
-
-        recv = 0
-        try:
-            piece_hash = CryptHash.sha512t()
-            piece_hashes = []
-            piece_recv = 0
-
-            mt = merkletools.MerkleTools()
-            mt.hash_function = CryptHash.sha512t
-
-            part = ""
-            for part in self.readFile(file_in, size):
-                if file_out:
-                    file_out.write(part)
-
-                recv += len(part)
-                piece_recv += len(part)
-                piece_hash.update(part)
-                if piece_recv >= piece_size:
-                    piece_digest = piece_hash.digest()
-                    piece_hashes.append(piece_digest)
-                    mt.leaves.append(piece_digest)
-                    piece_hash = CryptHash.sha512t()
-                    piece_recv = 0
-
-                    if len(piece_hashes) % 100 == 0 or recv == size:
-                        self.log.info("- [HASHING:%.0f%%] Pieces: %s, %.1fMB/%.1fMB" % (
-                            float(recv) / size * 100, len(piece_hashes), recv / 1024 / 1024, size / 1024 / 1024
-                        ))
-                        part = ""
-            if len(part) > 0:
-                piece_digest = piece_hash.digest()
-                piece_hashes.append(piece_digest)
-                mt.leaves.append(piece_digest)
-        except Exception as err:
-            raise err
-        finally:
-            if file_out:
-                file_out.close()
-
-        mt.make_tree()
-        merkle_root = mt.get_merkle_root()
-        if type(merkle_root) is bytes:  # Python <3.5
-            merkle_root = merkle_root.decode()
-        return merkle_root, piece_size, {
-            "sha512_pieces": piece_hashes
-        }

-
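The removed `hashBigfile` method hashes the stream piece by piece and collects one digest per piece. A minimal sketch of that piece-hashing step follows, under stated assumptions: `hash_pieces` is a hypothetical name, and SHA-512 truncated to 32 bytes stands in for `CryptHash.sha512t`, whose definition is not shown in this diff. The Merkle-root step is left out. As in the removed code, a piece is closed only at a chunk boundary, so the chunk size is assumed to divide the piece size evenly.

```python
import hashlib
import math


def hash_pieces(chunks, piece_size=1024 * 1024):
    """Return one digest per piece for an iterable of byte chunks."""
    digests = []
    piece_hash = hashlib.sha512()
    piece_recv = 0
    for chunk in chunks:
        piece_hash.update(chunk)
        piece_recv += len(chunk)
        if piece_recv >= piece_size:  # Piece complete: store digest and start a new one
            digests.append(piece_hash.digest()[:32])  # Assumed stand-in for sha512t
            piece_hash = hashlib.sha512()
            piece_recv = 0
    if piece_recv > 0:  # Trailing partial piece
        digests.append(piece_hash.digest()[:32])
    return digests


# 2.5 MiB of sample data, fed in 64 KiB chunks
piece_size = 1024 * 1024
data = bytes(range(256)) * 10240
chunk_list = [data[i:i + 64 * 1024] for i in range(0, len(data), 64 * 1024)]
piece_digests = hash_pieces(chunk_list, piece_size)
expected_pieces = math.ceil(len(data) / piece_size)
```

The number of digests matches the piece count that `hashFile` derives with `math.ceil(float(file_size) / piece_size)`.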

-    def hashFile(self, dir_inner_path, file_relative_path, optional=False):
-        inner_path = dir_inner_path + file_relative_path
-
-        file_size = self.site.storage.getSize(inner_path)
-        # Only care about optional files >1MB
-        if not optional or file_size < 1 * 1024 * 1024:
-            return super(ContentManagerPlugin, self).hashFile(dir_inner_path, file_relative_path, optional)
-
-        back = {}
-        content = self.contents.get(dir_inner_path + "content.json")
-
-        hash = None
-        piecemap_relative_path = None
-        piece_size = None
-
-        # Don't re-hash if it's already in content.json
-        if content and file_relative_path in content.get("files_optional", {}):
-            file_node = content["files_optional"][file_relative_path]
-            if file_node["size"] == file_size:
-                self.log.info("- [SAME SIZE] %s" % file_relative_path)
-                hash = file_node.get("sha512")
-                piecemap_relative_path = file_node.get("piecemap")
-                piece_size = file_node.get("piece_size")
-
-        if not hash or not piecemap_relative_path:  # Not in content.json yet
-            if file_size < 5 * 1024 * 1024:  # Don't create piecemap automatically for files smaller than 5MB
-                return super(ContentManagerPlugin, self).hashFile(dir_inner_path, file_relative_path, optional)
-
-            self.log.info("- [HASHING] %s" % file_relative_path)
-            merkle_root, piece_size, piecemap_info = self.hashBigfile(self.site.storage.open(inner_path, "rb"), file_size)
-            if not hash:
-                hash = merkle_root
-
-            if not piecemap_relative_path:
-                file_name = helper.getFilename(file_relative_path)
-                piecemap_relative_path = file_relative_path + ".piecemap.msgpack"
-                piecemap_inner_path = inner_path + ".piecemap.msgpack"
-
-                self.site.storage.open(piecemap_inner_path, "wb").write(Msgpack.pack({file_name: piecemap_info}))
-
-                back.update(super(ContentManagerPlugin, self).hashFile(dir_inner_path, piecemap_relative_path, optional=True))
-
-        piece_num = int(math.ceil(float(file_size) / piece_size))
-
-        # Add the merkle root to hashfield
-        hash_id = self.site.content_manager.hashfield.getHashId(hash)
-        self.optionalDownloaded(inner_path, hash_id, file_size, own=True)
-        self.site.storage.piecefields[hash].frombytes(b"\x01" * piece_num)

-